MIT Technology Review’s What’s Next series looks across industries, trends, and technologies to give you a first look at the future. You can read the rest of them here.

Thanks to the boom in artificial intelligence, the world of chips is on the cusp of a huge tidal shift. There is heightened demand for chips that can train AI models faster and ping them from devices like smartphones and satellites, enabling us to use these models without disclosing private data. Governments, tech giants, and startups alike are racing to carve out their slices of the growing semiconductor pie.

Here are four trends to look for in the year ahead that will define what the chips of the future will look like, who will make them, and which new technologies they’ll unlock.

CHIPS Acts around the world

On the outskirts of Phoenix, two of the world’s largest chip manufacturers, TSMC and Intel, are racing to construct campuses in the desert that they hope will become the seats of American chipmaking prowess. One thing the efforts have in common is their funding: in March, President Joe Biden announced $8.5 billion in direct federal funds and $11 billion in loans for Intel’s expansions around the country. Weeks later, another $6.6 billion was announced for TSMC.

The awards are just a portion of the US subsidies pouring into the chips industry via the $280 billion CHIPS and Science Act signed in 2022. The money means that any company with a foot in the semiconductor ecosystem is analyzing how to restructure its supply chains to benefit from the cash. While much of the money aims to boost American chip manufacturing, there’s room for other players to apply, from equipment makers to niche materials startups.

But the US is not the only country trying to onshore some of the chipmaking supply chain. Japan is spending $13 billion on its own equivalent to the CHIPS Act, Europe will be spending more than $47 billion, and earlier this year India announced a $15 billion effort to build local chip plants. The roots of this trend go all the way back to 2014, says Chris Miller, a professor at Tufts University and author of Chip War: The Fight for the World’s Most Critical Technology. That’s when China started offering massive subsidies to its chipmakers.

“This created a dynamic in which other governments concluded they had no choice but to offer incentives or see firms shift manufacturing to China,” he says. That threat, coupled with the surge in AI, has led Western governments to fund alternatives. In the next year, this might have a snowball effect, with even more countries starting their own programs for fear of being left behind.

The money is unlikely to lead to brand-new chip competitors or fundamentally restructure who the biggest chip players are, Miller says. Instead, it will mostly incentivize dominant players like TSMC to establish roots in multiple countries. But funding alone won’t be enough to do that quickly: TSMC’s effort to build plants in Arizona has been mired in missed deadlines and labor disputes, and Intel has similarly failed to meet its promised deadlines. And it’s unclear whether, whenever the plants do come online, their equipment and labor force will be capable of the same level of advanced chipmaking that the companies maintain abroad.

“The supply chain will only shift slowly, over years and decades,” Miller says. “But it is shifting.”

More AI on the edge

Currently, most of our interactions with AI models like ChatGPT are done via the cloud. That means that when you ask GPT to pick out an outfit (or to be your boyfriend), your request pings OpenAI’s servers, prompting the model housed there to process it and draw conclusions (known as “inference”) before a response is sent back to you. Relying on the cloud has some drawbacks: it requires internet access, for one, and it also means some of your data is shared with the model maker.

That’s why there’s been a lot of interest and investment in edge computing for AI, where the process of pinging the AI model happens directly on your device, like a laptop or smartphone. With the industry increasingly working toward a future in which AI models know a lot about us (Sam Altman described his killer AI app to me as one that knows “absolutely everything about my whole life, every email, every conversation I’ve ever had”), there’s a demand for faster “edge” chips that can run models without sharing private data. These chips face different constraints from the ones in data centers: they typically have to be smaller, cheaper, and more energy efficient.

The US Department of Defense is funding a lot of research into fast, private edge computing. In March, its research wing, the Defense Advanced Research Projects Agency (DARPA), announced a partnership with chipmaker EnCharge AI to create an ultra-powerful edge computing chip used for AI inference. EnCharge AI is working to make a chip that enables enhanced privacy but can also operate on very little power. This will make it suitable for military applications like satellites and off-grid surveillance equipment. The company expects to ship the chips in 2025.

AI models will always rely on the cloud for some applications, but new investment and interest in improving edge computing could bring faster chips, and therefore more AI, to our everyday devices. If edge chips get small and cheap enough, we’re likely to see even more AI-driven “smart devices” in our homes and workplaces. Today, AI models are mostly constrained to data centers.

“A lot of the challenges that we see in the data center will be overcome,” says EnCharge AI cofounder Naveen Verma. “I expect to see a big focus on the edge. I think it’s going to be critical to getting AI at scale.”

Big Tech enters the chipmaking fray

In industries ranging from fast fashion to lawn care, companies are paying exorbitant amounts in computing costs to create and train AI models for their businesses. Examples include models that employees can use to scan and summarize documents, as well as externally facing technologies like virtual agents that can walk you through how to repair your broken fridge. That means demand for cloud computing to train those models is through the roof.

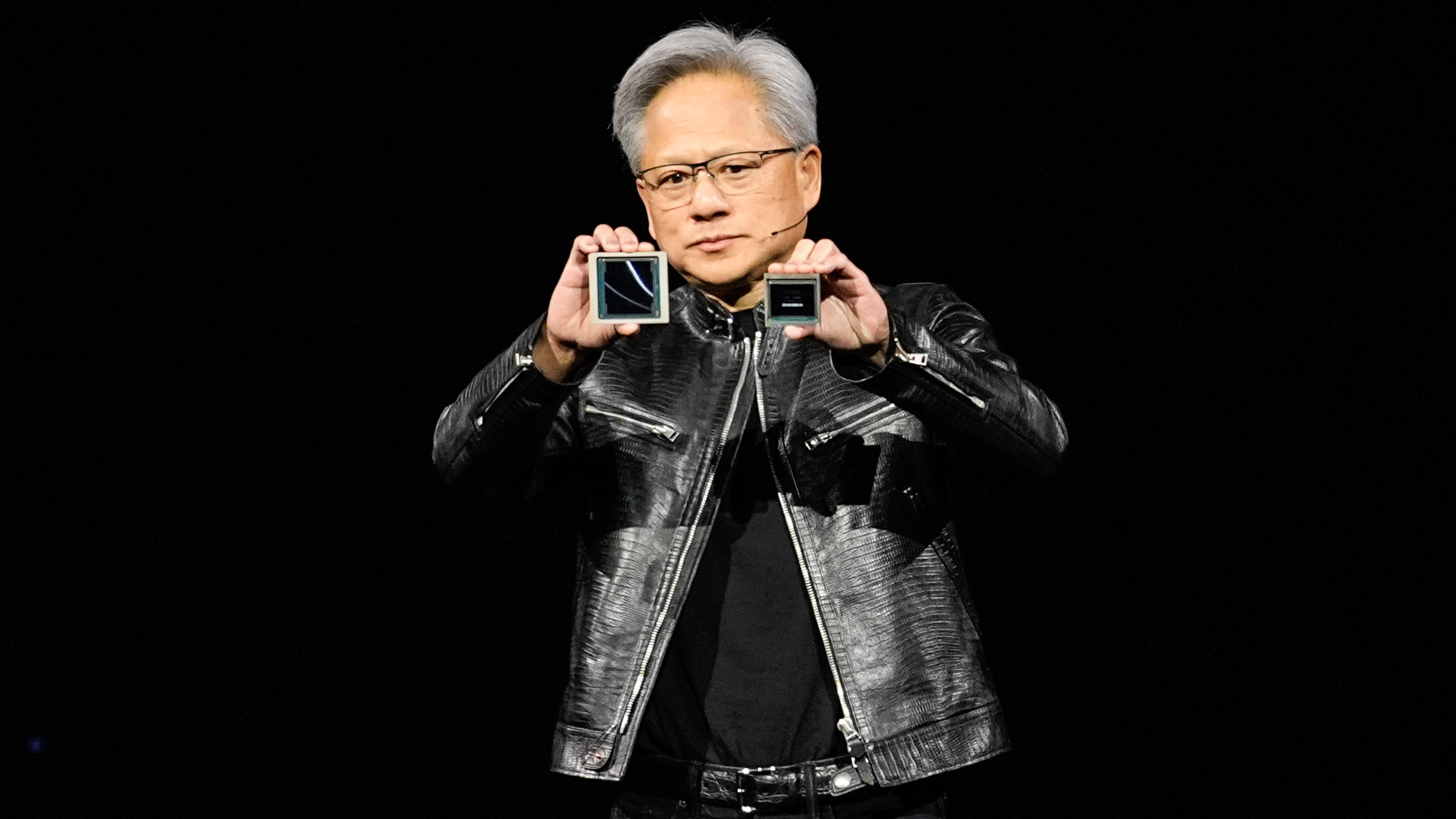

The companies providing the bulk of that computing power are Amazon, Microsoft, and Google. For years these tech giants have dreamed of increasing their profit margins by making chips for their data centers in-house rather than buying from companies like Nvidia, a giant with a near monopoly on the most advanced AI training chips and a value larger than the GDP of 183 countries.

Amazon started its effort in 2015, acquiring startup Annapurna Labs. Google moved next in 2018 with its own chips called TPUs. Microsoft launched its first AI chips in November, and Meta unveiled a new version of its own AI training chips in April.

That trend could tilt the scales away from Nvidia. But Nvidia doesn’t only play the role of rival in the eyes of Big Tech: regardless of their own in-house efforts, cloud giants still need its chips for their data centers. That’s partly because their own chipmaking efforts can’t fulfill all their needs, but it’s also because their customers expect to be able to use top-of-the-line Nvidia chips.

“This is really about giving the customers the choice,” says Rani Borkar, who leads hardware efforts at Microsoft Azure. She says she can’t envision a future in which Microsoft supplies all chips for its cloud services: “We will continue our strong partnerships and deploy chips from all the silicon partners that we work with.”

As cloud computing giants attempt to poach a bit of market share away from chipmakers, Nvidia is also attempting the converse. Last year the company started its own cloud service so customers can bypass Amazon, Google, or Microsoft and get computing time on Nvidia chips directly. As this dramatic struggle over market share unfolds, the coming year will be about whether customers see Big Tech’s chips as akin to Nvidia’s most advanced chips, or more like their little cousins.

Nvidia battles the startups

Despite Nvidia’s dominance, there is a wave of investment flowing toward startups that aim to outcompete it in certain slices of the chip market of the future. Those startups all promise faster AI training, but they have different ideas about which flashy computing technology will get them there, from quantum to photonics to reversible computation.

But Murat Onen, the 28-year-old founder of one such chip startup, Eva, which he spun out of his PhD work at MIT, is blunt about what it’s like to start a chip company right now.

“The king of the hill is Nvidia, and that’s the world that we live in,” he says.

Many of these companies, like SambaNova, Cerebras, and Graphcore, are trying to change the underlying architecture of chips. Imagine an AI accelerator chip as constantly having to shuffle data back and forth between different areas: a piece of information is stored in the memory zone but must move to the processing zone, where a calculation is made, and then be stored back in the memory zone for safekeeping. All that takes time and energy.

Making that process more efficient would deliver faster and cheaper AI training to customers, but only if the chipmaker has good enough software to allow the AI training company to seamlessly transition to the new chip. If the software transition is too clunky, model makers such as OpenAI, Anthropic, and Mistral are likely to stick with big-name chipmakers. That means companies taking this approach, like SambaNova, are spending a lot of their time not just on chip design but on software design too.

Onen is proposing changes one level deeper. Instead of traditional transistors, which have delivered greater efficiency over decades by getting smaller and smaller, he’s using a new component called a proton-gated transistor that he says Eva designed specifically for the mathematical needs of AI training. It allows devices to store and process data in the same place, saving time and computing energy. The idea of using such a component for AI inference dates back to the 1960s, but researchers could never figure out how to use it for AI training, in part because of a materials roadblock: it requires a material that can, among other qualities, precisely control conductivity at room temperature.

One day in the lab, “through optimizing these numbers, and getting very lucky, we got the material that we wanted,” Onen says. “All of a sudden, the device is not a science fair project.” That raised the possibility of using such a component at scale. After months of working to confirm that the data was correct, he founded Eva, and the work was published in Science.

But in a sector where so many founders have promised, and failed, to topple the dominance of the leading chipmakers, Onen frankly admits that it will be years before he’ll know if the design works as intended and if manufacturers will agree to produce it. Leading a company through that uncertainty, he says, requires flexibility and an appetite for skepticism from others.

“I think sometimes people feel too attached to their ideas, and then kind of feel insecure that if this goes away there won’t be anything next,” he says. “I don’t think I feel that way. I’m still looking for people to challenge us and say this is wrong.”