Self-driving firm Waabi is utilizing a generative AI mannequin to assist predict the motion of automobiles, it introduced as we speak.

The brand new system, known as Copilot4D, was skilled on troves of knowledge from lidar sensors, which use mild to sense how distant objects are. Should you immediate the mannequin with a state of affairs, like a driver recklessly merging onto a freeway at excessive velocity, it predicts how the encompassing automobiles will transfer, then generates a lidar illustration of 5 to 10 seconds into the longer term (displaying a pileup, maybe). As we speak’s announcement is concerning the preliminary model of Copilot4D, however Waabi CEO Raquel Urtasun says a extra superior and interpretable model is deployed in Waabi’s testing fleet of autonomous vehicles in Texas that helps the driving software program resolve the right way to react.

Whereas autonomous driving has lengthy relied on machine studying to plan routes and detect objects, some corporations and researchers are actually betting that generative AI — fashions that absorb knowledge of their environment and generate predictions — will assist carry autonomy to the subsequent stage. Wayve, a Waabi competitor, launched a comparable mannequin final yr that’s skilled on the video that its automobiles accumulate.

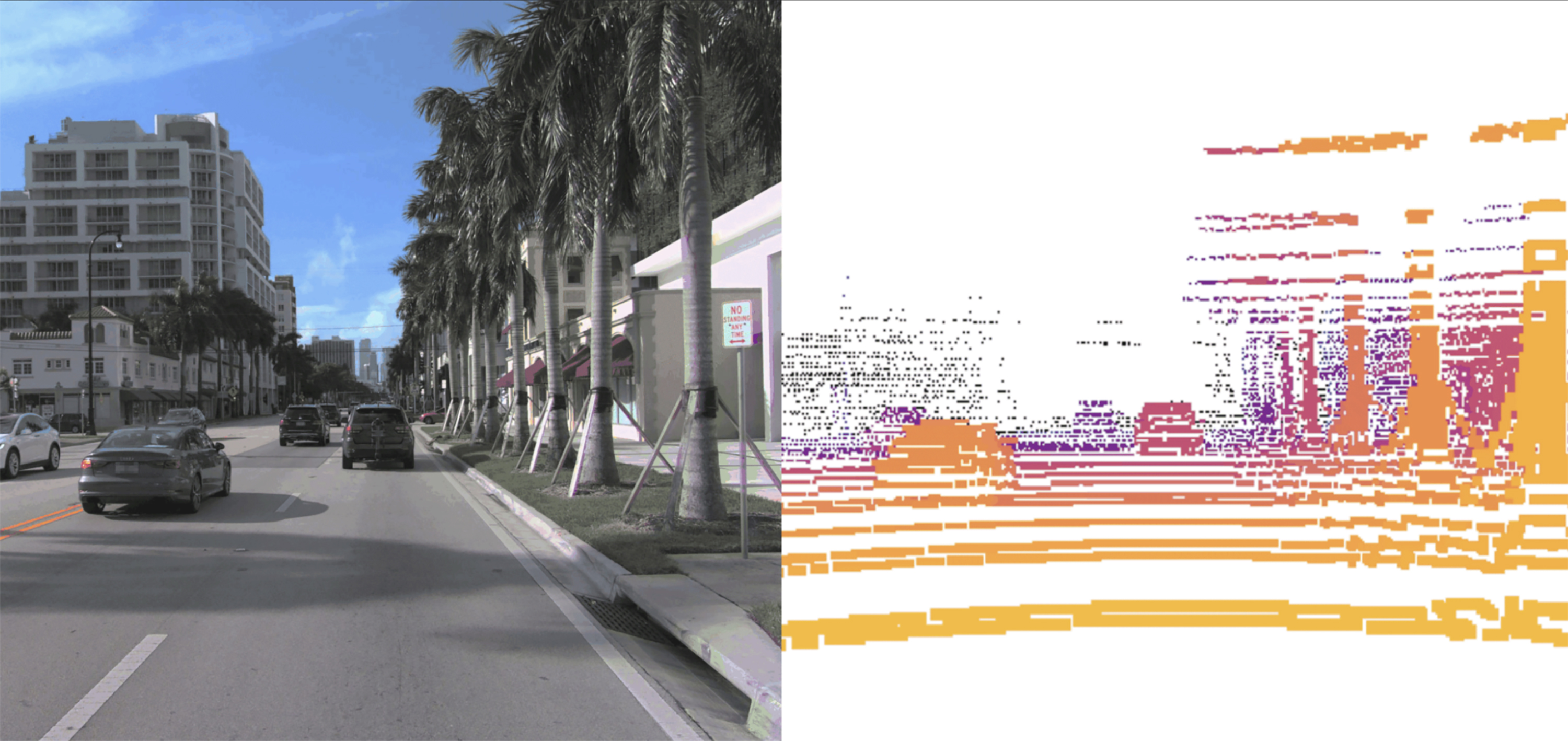

Waabi’s mannequin works in the same solution to picture or video mills like OpenAI’s DALL-E and Sora. It takes level clouds of lidar knowledge, which visualize a 3D map of the automobile’s environment, and breaks them into chunks, just like how picture mills break photographs into pixels. Based mostly on its coaching knowledge, Copilot4D then predicts how all factors of lidar knowledge will transfer. Doing this constantly permits it to generate predictions 5-10 seconds into the longer term.

Waabi is one in every of a handful of autonomous driving corporations, together with rivals Wayve and Ghost, that describe their strategy as “AI-first.” To Urtasun, which means designing a system that learns from knowledge, moderately than one which should be taught reactions to particular conditions. The cohort is betting their strategies may require fewer hours of road-testing self-driving automobiles, a charged subject following an October 2023 accident the place a Cruise robotaxi dragged a pedestrian in San Francisco.

Waabi is completely different from its rivals in constructing a generative mannequin for lidar, moderately than cameras.

“If you wish to be a Stage four participant, lidar is a should,” says Urtasun, referring to the automation stage the place the automobile doesn’t require the eye of a human to drive safely. Cameras do a very good job of displaying what the automobile is seeing, however they’re not as adept at measuring distances or understanding the geometry of the automobile’s environment, she says.

Although Waabi’s mannequin can generate movies displaying what a automobile will see by means of its lidar sensors, these movies is not going to be used as coaching within the firm’s driving simulator that it makes use of to construct and check its driving mannequin. That’s to make sure any hallucinations arising from Copilot4D don’t get taught within the simulator.

The underlying expertise isn’t new, says Bernard Adam Lange, a PhD scholar at Stanford who has constructed and researched comparable fashions, but it surely’s the primary time he’s seen a generative lidar mannequin depart the confines of a analysis lab and be scaled up for industrial use. A mannequin like this is able to typically assist make the “mind” of any autonomous car capable of motive extra shortly and precisely, he says.

“It’s the scale that’s transformative,” he says. “The hope is that these fashions might be utilized in downstream duties” like detecting objects and predicting the place individuals or issues may transfer subsequent.

Copilot4D can solely estimate to this point into the longer term, and movement prediction fashions normally degrade the farther they’re requested to challenge ahead. Urtasun says that the mannequin solely must think about what occurs 5 to 10 seconds forward for almost all of driving selections, although the benchmark exams highlighted by Waabi are based mostly on 3-second predictions. Chris Gerdes, co-director of Stanford’s Heart for Automotive Analysis, says this metric shall be key in figuring out how helpful the mannequin is at making selections.

“If the 5-second predictions are strong however the 10-second predictions are simply barely usable, there are a variety of conditions the place this is able to not be adequate on the highway,” he says.

The brand new mannequin resurfaces a query rippling by means of the world of generative AI: whether or not or to not make fashions open-source. Releasing Copilot4D would let tutorial researchers, who battle with entry to massive knowledge units, peek beneath the hood at the way it’s made, independently consider security, and probably advance the sector. It will additionally do the identical for Waabi’s rivals. Waabi has printed a paper detailing the creation of the mannequin however has not launched the code, and Urtasun is not sure if they’ll.

“We would like academia to even have a say in the way forward for self-driving,” she says, including that open-source fashions are extra trusted. “However we additionally must be a bit cautious as we develop our expertise in order that we don’t unveil all the pieces to our rivals.”