A new tool lets artists add invisible changes to the pixels in their art before they upload it online, so that if it’s scraped into an AI training set, it can cause the resulting model to break in chaotic and unpredictable ways.

The tool, called Nightshade, is intended as a way to fight back against AI companies that use artists’ work to train their models without the creator’s permission. Using it to “poison” this training data could damage future iterations of image-generating AI models, such as DALL-E, Midjourney, and Stable Diffusion, by rendering some of their outputs useless: dogs become cats, cars become cows, and so forth. MIT Technology Review got an exclusive preview of the research, which has been submitted for peer review at the computer security conference Usenix.

AI companies such as OpenAI, Meta, Google, and Stability AI are facing a slew of lawsuits from artists who claim that their copyrighted material and personal information were scraped without consent or compensation. Ben Zhao, a professor at the University of Chicago, who led the team that created Nightshade, says the hope is that it will help tip the power balance back from AI companies towards artists, by creating a powerful deterrent against disrespecting artists’ copyright and intellectual property. Meta, Google, Stability AI, and OpenAI did not respond to MIT Technology Review’s request for comment on how they might respond.

Zhao’s team also developed Glaze, a tool that allows artists to “mask” their own personal style to prevent it from being scraped by AI companies. It works in a similar way to Nightshade: by changing the pixels of images in subtle ways that are invisible to the human eye but manipulate machine-learning models to interpret the image as something different from what it actually shows.
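To make the idea of a subtle, bounded pixel change concrete, here is a minimal Python sketch. It is not the Glaze or Nightshade algorithm: those tools choose their changes deliberately so that a model misreads the image, whereas this toy uses random noise purely as a stand-in. The function names `perturb` and `max_abs_diff`, the `budget` parameter, and the tiny sample image are all hypothetical and exist only for illustration.

```python
import random


def perturb(pixels, budget=2, seed=0):
    """Return a copy of `pixels` with every 0-255 value shifted by at most `budget`.

    Assumption: random noise stands in for the carefully chosen changes
    that a real cloaking or poisoning tool would compute.
    """
    rng = random.Random(seed)
    out = []
    for row in pixels:
        new_row = []
        for value in row:
            shifted = value + rng.randint(-budget, budget)
            # Clamp so the result is still a valid 8-bit pixel value.
            new_row.append(max(0, min(255, shifted)))
        out.append(new_row)
    return out


def max_abs_diff(a, b):
    """Largest per-pixel difference between two images of the same shape."""
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))


# A tiny hypothetical grayscale image: 2 rows of 3 pixels.
image = [[0, 128, 255], [64, 200, 17]]
shaded = perturb(image, budget=2)
print("largest change to any pixel:", max_abs_diff(image, shaded))
```

Whatever the seed, no pixel moves by more than `budget` out of 255 levels, which is why a change of this size is hard to see.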

The team intends to integrate Nightshade into Glaze, and artists can choose whether they want to use the data-poisoning tool or not. The team is also making Nightshade open source, which would allow others to tinker with it and make their own versions. The more people use it and make their own versions of it, the more powerful the tool becomes, Zhao says. The data sets for large AI models can consist of billions of images, so the more poisoned images are scraped into a model’s training data, the more damage the technique will cause.

A targeted attack

Nightshade exploits a security vulnerability in generative AI models, one arising from the fact that they are trained on vast amounts of data, in this case images that have been hoovered from the internet. Nightshade messes with those images.

Artists who want to upload their work online but don’t want their images to be scraped by AI companies can upload them to Glaze and choose to mask them with an art style different from their own. They can then also opt to use Nightshade. Once AI developers scrape the internet to get more data to tweak an existing AI model or build a new one, these poisoned samples make their way into the model’s data set and cause it to malfunction.

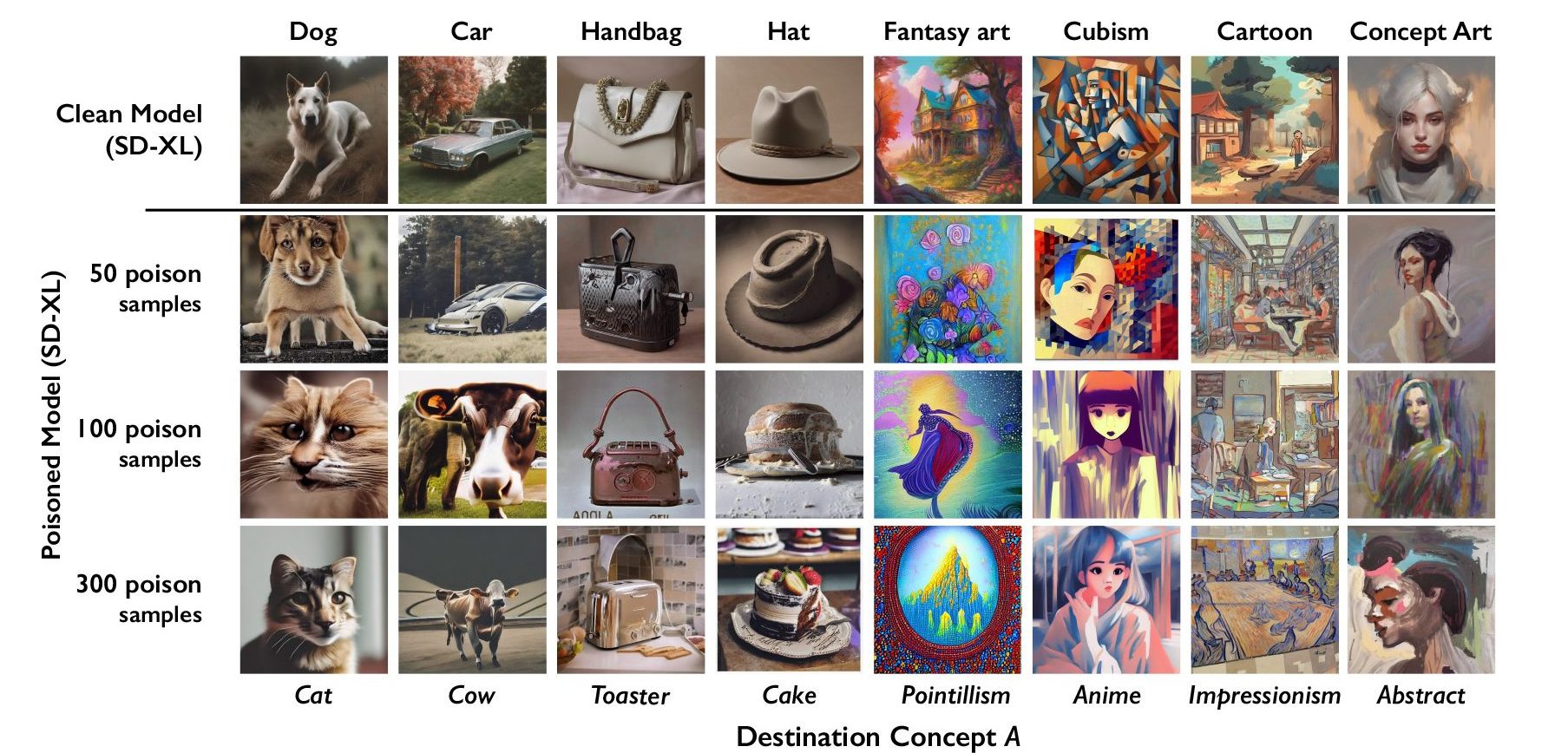

Poisoned data samples can manipulate models into learning, for example, that images of hats are cakes, and images of handbags are toasters. The poisoned data is very difficult to remove, as it requires tech companies to painstakingly find and delete each corrupted sample.

The researchers tested the attack on Stable Diffusion’s latest models and on an AI model they trained themselves from scratch. When they fed Stable Diffusion just 50 poisoned images of dogs and then prompted it to create images of dogs itself, the output started looking weird, with creatures that had too many limbs and cartoonish faces. With 300 poisoned samples, an attacker can manipulate Stable Diffusion into generating images of dogs that look like cats.

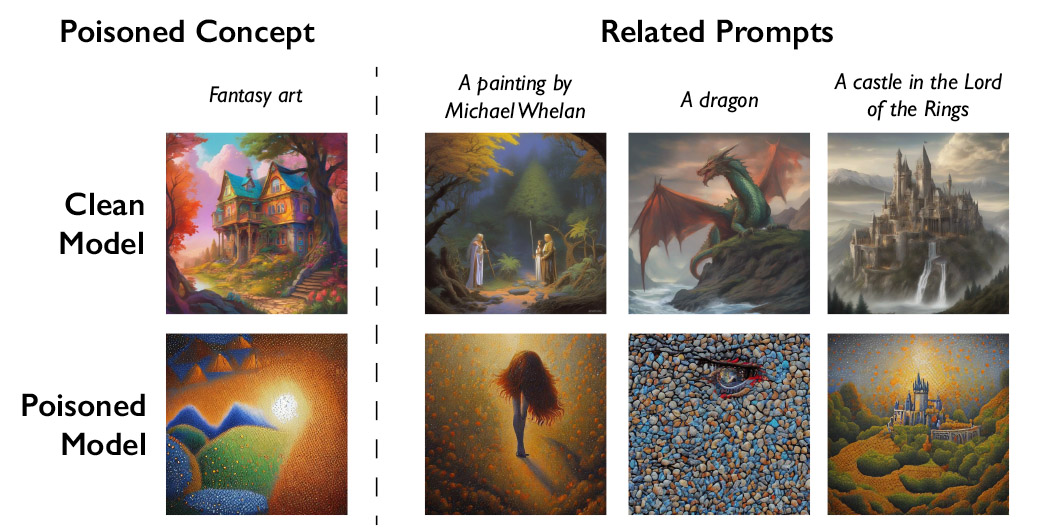

Generative AI models are excellent at making connections between words, which helps the poison spread. Nightshade infects not only the word “dog” but all similar concepts, such as “puppy,” “husky,” and “wolf.” The poison attack also works on tangentially related images. For example, if the model scraped a poisoned image for the prompt “fantasy art,” the prompts “dragon” and “a castle in The Lord of the Rings” would similarly be manipulated into something else.

Zhao admits there is a risk that people might abuse the data-poisoning technique for malicious uses. However, he says attackers would need thousands of poisoned samples to inflict real damage on larger, more powerful models, as they are trained on billions of data samples.
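A quick back-of-the-envelope calculation shows the scale involved when thousands of poisoned samples sit inside a data set of billions. The figures below are illustrative placeholders, not numbers from the research, and `poison_fraction` is a hypothetical helper written for this sketch.

```python
def poison_fraction(poisoned: int, total: int) -> float:
    """Share of a training set made up of poisoned samples."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= poisoned <= total:
        raise ValueError("poisoned must be between 0 and total")
    return poisoned / total


# Illustrative values only (assumptions, not figures from the study):
# "thousands" of poisoned images in a data set of "billions".
fraction = poison_fraction(5_000, 2_000_000_000)
print(f"{fraction:.6%} of the data set is poisoned")
```

With these placeholder values the poisoned share is a few millionths of the whole data set.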

“We don’t yet know of robust defenses against these attacks. We haven’t yet seen poisoning attacks on modern [machine learning] models in the wild, but it could be just a matter of time,” says Vitaly Shmatikov, a professor at Cornell University who studies AI model security and was not involved in the research. “The time to work on defenses is now,” Shmatikov adds.

Gautam Kamath, an assistant professor at the University of Waterloo who researches data privacy and robustness in AI models and wasn’t involved in the study, says the work is “fantastic.”

The research shows that vulnerabilities “don’t magically go away for these new models, and in fact only become more serious,” Kamath says. “This is especially true as these models become more powerful and people place more trust in them, since the stakes only rise over time.”

A powerful deterrent

Junfeng Yang, a computer science professor at Columbia University, who has studied the security of deep-learning systems and wasn’t involved in the work, says Nightshade could have a big impact if it makes AI companies respect artists’ rights more, for example by being more willing to pay out royalties.

AI companies that have developed generative text-to-image models, such as Stability AI and OpenAI, have offered to let artists opt out of having their images used to train future versions of the models. But artists say this is not enough. Eva Toorenent, an illustrator and artist who has used Glaze, says opt-out policies require artists to jump through hoops and still leave tech companies with all the power.

Toorenent hopes Nightshade will change the status quo.

“It is going to make [AI companies] think twice, because they have the possibility of destroying their entire model by taking our work without our consent,” she says.

Autumn Beverly, another artist, says tools like Nightshade and Glaze have given her the confidence to post her work online again. She previously removed it from the internet after discovering it had been scraped without her consent into the popular LAION image database.

“I’m just really grateful that we have a tool that can help return the power back to the artists for their own work,” she says.