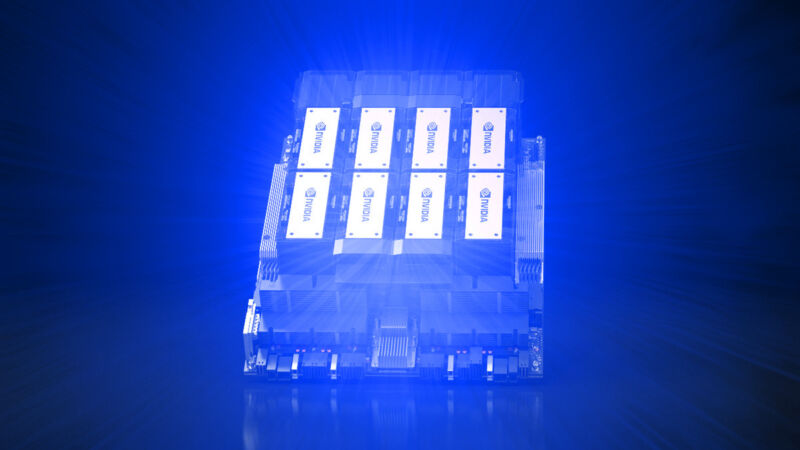

The Nvidia H200 GPU covered with a whimsical blue explosion that figuratively represents raw compute power bursting forth in a glowing flurry. (credit: Nvidia | Benj Edwards)

On Monday, Nvidia announced the HGX H200 Tensor Core GPU, which uses the Hopper architecture to accelerate AI applications. It is a follow-up to the H100 GPU, which was released last year and was previously Nvidia's most powerful AI GPU chip. If widely deployed, the H200 could lead to far more powerful AI models, and faster response times for existing ones like ChatGPT, in the near future.

According to experts, a lack of computing power (often called "compute") has been a major bottleneck for AI progress this past year, hindering deployments of existing AI models and slowing the development of new ones. Shortages of the powerful GPUs that accelerate AI models are largely to blame. One way to alleviate the compute bottleneck is to make more chips, but you can also make AI chips more powerful. That second approach may make the H200 an attractive product for cloud providers.

What is the H200 good for? Despite the "G" in the "GPU" name, data center GPUs like this typically aren't used for graphics. GPUs are ideal for AI applications because they perform vast numbers of matrix multiplications in parallel, and those multiplications are essential for neural networks to function. GPUs are crucial both in the training portion of building an AI model and in the "inference" portion, where people feed inputs into an AI model and it returns results.
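To make the matrix-multiplication point concrete, here is a minimal sketch, assuming a single fully connected layer with made-up weights (the names `matmul`, `relu`, and `dense_layer` and all the numbers are hypothetical, chosen only for illustration). It runs one inference step in plain Python. Each output element is an independent dot product, so in principle every one of them could be computed at the same time, and that kind of independent work is what a GPU's many parallel cores are built to handle.

```python
# Hypothetical illustration: one dense neural-network layer written as a matrix multiply.
# Plain Python computes each cell one after another; a GPU would spread these
# independent dot products across many cores at once.

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"

    def cell(i, j):
        # Output element (i, j) depends only on row i of `a` and column j of `b`,
        # so no cell needs the result of any other cell.
        return sum(a[i][p] * b[p][j] for p in range(k))

    return [[cell(i, j) for j in range(m)] for i in range(n)]


def relu(x):
    """Elementwise ReLU activation: negative values become 0.0."""
    return [[max(0.0, v) for v in row] for row in x]


def dense_layer(inputs, weights, bias):
    """Inference through one hypothetical layer: relu(inputs @ weights + bias)."""
    z = matmul(inputs, weights)
    return relu([[v + b for v, b in zip(row, bias)] for row in z])


# A made-up batch of 2 inputs with 3 features each, mapped to 2 outputs.
inputs = [[1.0, 2.0, 3.0],
          [0.5, -1.0, 2.0]]
weights = [[0.1, -0.2],
           [0.0, 0.3],
           [0.2, 0.1]]
bias = [0.0, -0.5]

print(dense_layer(inputs, weights, bias))
```

Real AI frameworks hand this same operation to optimized GPU kernels, and training repeats similar multiplications many more times to compute gradients. That repetition is why raw matrix-multiply throughput matters so much for chips like the H200.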
