David Chalmers was not expecting the invitation he received in September of last year. As a leading authority on consciousness, Chalmers regularly circles the world delivering talks at universities and academic meetings to rapt audiences of philosophers, the sort of people who might spend hours debating whether the world outside their own heads is real and then go blithely about the rest of their day. This latest request, though, came from a surprising source: the organizers of the Conference on Neural Information Processing Systems (NeurIPS), a yearly gathering of the brightest minds in artificial intelligence.

Less than six months before the conference, an engineer named Blake Lemoine, then at Google, had gone public with his contention that LaMDA, one of the company’s AI systems, had achieved consciousness. Lemoine’s claims were quickly dismissed in the press, and he was summarily fired, but the genie would not return to the bottle quite so easily, especially after the release of ChatGPT in November 2022. Suddenly it was possible for anyone to carry on a sophisticated conversation with a polite, creative artificial agent.

Chalmers was an eminently sensible choice to speak about AI consciousness. He’d earned his PhD in philosophy at an Indiana University AI lab, where he and his computer scientist colleagues spent their breaks debating whether machines might one day have minds. In his 1996 book, The Conscious Mind, he spent an entire chapter arguing that artificial consciousness was possible.

If he had been able to interact with systems like LaMDA and ChatGPT back in the ’90s, before anyone knew how such a thing might work, he would have thought there was a good chance they were conscious, Chalmers says. But when he stood before a crowd of NeurIPS attendees in a cavernous New Orleans convention hall, clad in his trademark leather jacket, he offered a different assessment. Yes, large language models (systems that have been trained on enormous corpora of text in order to mimic human writing as accurately as possible) are impressive. But, he said, they lack too many of the potential requisites for consciousness for us to believe that they actually experience the world.

At the breakneck pace of AI development, however, things can shift suddenly. For his mathematically minded audience, Chalmers got concrete: the chances of developing any conscious AI in the next 10 years were, he estimated, above one in five.

Not many people dismissed his proposal as ridiculous, Chalmers says: “I mean, I’m sure some people had that reaction, but they weren’t the ones talking to me.” Instead, he spent the next several days in conversation after conversation with AI experts who took the possibilities he’d described very seriously. Some came to Chalmers effervescent with enthusiasm at the concept of conscious machines. Others, though, were horrified at what he had described. If an AI were conscious, they argued (if it could look out at the world from its own personal perspective, not simply processing inputs but also experiencing them), then, perhaps, it could suffer.

AI consciousness isn’t just a devilishly tricky intellectual puzzle; it’s a morally weighty problem with potentially dire consequences. Fail to identify a conscious AI, and you might unintentionally subjugate, or even torture, a being whose interests ought to matter. Mistake an unconscious AI for a conscious one, and you risk compromising human safety and happiness for the sake of an unthinking, unfeeling hunk of silicon and code. Both mistakes are easy to make. “Consciousness poses a unique challenge in our attempts to study it, because it’s hard to define,” says Liad Mudrik, a neuroscientist at Tel Aviv University who has researched consciousness since the early 2000s. “It’s inherently subjective.”

Over the past few decades, a small research community has doggedly attacked the question of what consciousness is and how it works. The effort has yielded real progress on what once seemed an unsolvable problem. Now, with the rapid advance of AI technology, these insights could offer our only guide to the untested, morally fraught waters of artificial consciousness.

“If we as a field will be able to use the theories that we have, and the findings that we have, in order to reach a good test for consciousness,” Mudrik says, “it will probably be one of the most important contributions that we could give.”

When Mudrik explains her consciousness research, she starts with one of her very favorite things: chocolate. Placing a piece in your mouth sparks a symphony of neurobiological events: your tongue’s sugar and fat receptors activate brain-bound pathways, clusters of cells in the brain stem stimulate your salivary glands, and neurons deep within your head release the chemical dopamine. None of those processes, though, captures what it is like to snap a chocolate square from its foil packet and let it melt in your mouth. “What I’m trying to understand is what in the brain allows us not only to process information, which in its own right is a formidable challenge and an amazing achievement of the brain, but also to experience the information that we are processing,” Mudrik says.

Studying information processing would have been the more straightforward choice for Mudrik, professionally speaking. Consciousness has long been a marginalized topic in neuroscience, seen as at best unserious and at worst intractable. “A fascinating but elusive phenomenon,” reads the “Consciousness” entry in the 1996 edition of the International Dictionary of Psychology. “Nothing worth reading has been written on it.”

Mudrik was not dissuaded. From her undergraduate years in the early 2000s, she knew that she didn’t want to research anything other than consciousness. “It might not be the most sensible decision to make as a young researcher, but I just couldn’t help it,” she says. “I couldn’t get enough of it.” She earned two PhDs, one in neuroscience and one in philosophy, in her determination to decipher the nature of human experience.

As slippery a topic as consciousness can be, it is not impossible to pin down. Put as simply as possible, it’s the ability to experience things. It’s often confused with terms like “sentience” and “self-awareness,” but according to the definitions that many experts use, consciousness is a prerequisite for those other, more sophisticated abilities. To be sentient, a being must be able to have positive and negative experiences; in other words, pleasures and pains. And being self-aware means not only having an experience but also knowing that you are having an experience.

In her laboratory, Mudrik doesn’t worry about sentience and self-awareness; she’s interested in observing what happens in the brain when she manipulates people’s conscious experience. That’s an easy thing to do in principle. Give someone a piece of broccoli to eat, and the experience will be very different from eating a piece of chocolate, and will probably result in a different brain scan. The problem is that those differences are uninterpretable. It would be impossible to discern which are linked to changes in information (broccoli and chocolate activate very different taste receptors) and which represent changes in the conscious experience.

The trick is to modify the experience without modifying the stimulus, like giving someone a piece of chocolate and then flipping a switch to make it feel like eating broccoli. That’s not possible with taste, but it is with vision. In one widely used approach, scientists have people look at two different images simultaneously, one with each eye. Although the eyes take in both images, it’s impossible to perceive both at once, so subjects will often report that their visual experience “flips”: first they see one image, and then, spontaneously, they see the other. By tracking brain activity during these flips in conscious awareness, scientists can observe what happens when incoming information stays the same but the experience of it shifts.

With these and other approaches, Mudrik and her colleagues have managed to establish some concrete facts about how consciousness works in the human brain. The cerebellum, a brain region at the base of the skull that resembles a fist-size tangle of angel-hair pasta, appears to play no role in conscious experience, though it is crucial for unconscious motor tasks like riding a bike. On the other hand, feedback connections, such as those running from the “higher,” cognitive regions of the brain to those involved in more basic sensory processing, seem essential to consciousness. (This, by the way, is one good reason to doubt the consciousness of LLMs: they lack substantial feedback connections.)

A decade ago, a group of Italian and Belgian neuroscientists managed to devise a test for human consciousness that uses transcranial magnetic stimulation (TMS), a noninvasive form of brain stimulation that is applied by holding a figure-eight-shaped magnetic wand near someone’s head. Solely from the resulting patterns of brain activity, the team was able to distinguish conscious people from those who were under anesthesia or deeply asleep, and they could even detect the difference between a vegetative state (where someone is awake but not conscious) and locked-in syndrome (in which a patient is conscious but cannot move at all).

That’s an enormous step forward in consciousness research, but it means little for the question of conscious AI: OpenAI’s GPT models don’t have a brain that can be stimulated by a TMS wand. To test for AI consciousness, it’s not enough to identify the structures that give rise to consciousness in the human brain. You need to know why those structures contribute to consciousness, in a way that’s rigorous and general enough to be applicable to any system, human or otherwise.

“Ultimately, you need a theory,” says Christof Koch, former president of the Allen Institute and an influential consciousness researcher. “You can’t just depend on your intuitions anymore; you need a foundational theory that tells you what consciousness is, how it gets into the world, and who has it and who doesn’t.”

Here’s one theory about how that litmus test for consciousness might work: any being that is intelligent enough, that is capable of responding successfully to a wide enough variety of contexts and challenges, must be conscious. It’s not an absurd theory on its face. We humans have the most intelligent brains around, as far as we’re aware, and we’re definitely conscious. More intelligent animals, too, seem more likely to be conscious: there’s far more consensus that chimpanzees are conscious than, say, crabs.

But consciousness and intelligence are not the same. When Mudrik flashes images at her experimental subjects, she’s not asking them to contemplate anything or testing their problem-solving abilities. Even a crab scuttling across the ocean floor, with no awareness of its past or thoughts about its future, would still be conscious if it could experience the pleasure of a tasty morsel of shrimp or the pain of an injured claw.

Susan Schneider, director of the Center for the Future Mind at Florida Atlantic University, thinks that AI could reach greater heights of intelligence by forgoing consciousness altogether. Conscious processes like holding something in short-term memory are pretty limited: we can only pay attention to a couple of things at a time and often struggle to do simple tasks like remembering a phone number long enough to call it. It’s not immediately obvious what an AI would gain from consciousness, especially considering the impressive feats such systems have been able to achieve without it.

Further iterations of GPT may prove themselves more and more intelligent, capable of meeting a broad spectrum of demands from acing the bar exam to building a website from scratch. But their success, in and of itself, can’t be taken as evidence of their consciousness. Even a machine that behaves indistinguishably from a human isn’t necessarily aware of anything at all.

Schneider, though, hasn’t lost hope in tests. Together with the Princeton physicist Edwin Turner, she has formulated what she calls the “artificial consciousness test.” It’s not easy to perform: it requires isolating an AI agent from any information about consciousness throughout its training. (This is important so that it can’t, like LaMDA, just parrot human statements about consciousness.) Then, once the system is trained, the tester asks it questions that it could only answer if it knew about consciousness: knowledge it could only have acquired from being conscious itself. Can it understand the plot of the film Freaky Friday, where a mother and daughter switch bodies, their consciousnesses dissociated from their physical selves? Does it grasp the concept of dreaming, or even report dreaming itself? Can it conceive of reincarnation or an afterlife?

There’s a huge limitation to this approach: it requires the capacity for language. Human infants and dogs, both of which are widely believed to be conscious, could not possibly pass this test, and an AI could conceivably become conscious without using language at all. Putting a language-based AI like GPT to the test is likewise impossible, as it has been exposed to the idea of consciousness in its training. (Ask ChatGPT to explain Freaky Friday; it does a respectable job.) And because we still understand so little about how advanced AI systems work, it would be difficult, if not impossible, to completely protect an AI against such exposure. Our very language is imbued with the fact of our consciousness: words like “mind,” “soul,” and “self” make sense to us by virtue of our conscious experience. Who’s to say that an extremely intelligent, nonconscious AI system couldn’t suss that out?

If Schneider’s test isn’t foolproof, that leaves one more option: opening up the machine. Understanding how an AI works on the inside could be an essential step toward determining whether or not it is conscious, if you know how to interpret what you’re looking at. Doing so requires a good theory of consciousness.

A few decades ago, we might have been entirely lost. The only available theories came from philosophy, and it wasn’t clear how they might be applied to a physical system. But since then, researchers like Koch and Mudrik have helped to develop and refine a number of ideas that could prove useful guides to understanding artificial consciousness.

Numerous theories have been proposed, and none has yet been proved, or even deemed a front-runner. And they make radically different predictions about AI consciousness.

Some theories treat consciousness as a feature of the brain’s software: all that matters is that the brain performs the right set of jobs, in the right sort of way. According to global workspace theory, for example, systems are conscious if they possess the requisite architecture: a variety of independent modules, plus a “global workspace” that takes in information from those modules and selects some of it to broadcast across the entire system.

Other theories tie consciousness more squarely to physical hardware. Integrated information theory (IIT) proposes that a system’s consciousness depends on the particular details of its physical structure: specifically, how the current state of its physical components influences their future and indicates their past. According to IIT, conventional computer systems, and thus current-day AI, can never be conscious, because they don’t have the right causal structure. (The theory was recently criticized by some researchers, who think it has gotten outsize attention.)

Anil Seth, a professor of neuroscience at the University of Sussex, is more sympathetic to the hardware-based theories, for one main reason: he thinks biology matters. Every conscious creature that we know of breaks down organic molecules for energy, works to maintain a stable internal environment, and processes information through networks of neurons via a combination of chemical and electrical signals. If that’s true of all conscious creatures, some scientists argue, it’s not a stretch to suspect that any one of those traits, or perhaps even all of them, might be necessary for consciousness.

Because he thinks biology is so important to consciousness, Seth says, he spends more time worrying about the possibility of consciousness in brain organoids (clumps of neural tissue grown in a dish) than in AI. “The problem is, we don’t know if I’m right,” he says. “And I may well be wrong.”

He’s not alone in this attitude. Every expert has a preferred theory of consciousness, but none treats it as ideology; all of them are eternally alert to the possibility that they have backed the wrong horse. In the past five years, consciousness scientists have started working together on a series of “adversarial collaborations,” in which supporters of different theories come together to design neuroscience experiments that could help test them against each other. The researchers agree ahead of time on which patterns of results will support which theory. Then they run the experiments and see what happens.

In June, Mudrik, Koch, Chalmers, and a large group of collaborators released the results from an adversarial collaboration pitting global workspace theory against integrated information theory. Neither theory came out entirely on top. But Mudrik says the process was still fruitful: forcing the supporters of each theory to make concrete predictions helped to make the theories themselves more precise and scientifically useful. “They’re all theories in progress,” she says.

At the same time, Mudrik has been trying to figure out what this diversity of theories means for AI. She’s working with an interdisciplinary group of philosophers, computer scientists, and neuroscientists who recently put out a white paper that makes some practical recommendations on detecting AI consciousness. In the paper, the team draws on a variety of theories to build a sort of consciousness “report card”: a list of markers that would indicate an AI is conscious, under the assumption that one of those theories is true. These markers include having certain feedback connections, using a global workspace, flexibly pursuing goals, and interacting with an external environment (whether real or virtual).

In effect, this strategy recognizes that the major theories of consciousness have some chance of turning out to be true, and so if more theories agree that an AI is conscious, it is more likely to actually be conscious. By the same token, a system that lacks all those markers can only be conscious if our current theories are very wrong. That’s where LLMs like LaMDA currently are: they don’t possess the right type of feedback connections, use global workspaces, or appear to have any other markers of consciousness.

The trouble with consciousness-by-committee, though, is that this state of affairs won’t last. According to the authors of the white paper, there are no major technological hurdles in the way of building AI systems that score highly on their consciousness report card. Soon enough, we’ll be dealing with a question straight out of science fiction: What should one do with a potentially conscious machine?

In 1989, years before the neuroscience of consciousness truly came into its own, Star Trek: The Next Generation aired an episode titled “The Measure of a Man.” The episode centers on the character Data, an android who spends much of the show grappling with his own disputed humanity. In this particular episode, a scientist wants to forcibly disassemble Data, to figure out how he works; Data, worried that disassembly could effectively kill him, refuses; and Data’s captain, Picard, must defend in court his right to refuse the procedure.

Picard never proves that Data is conscious. Rather, he demonstrates that no one can disprove that Data is conscious, and so the risk of harming Data, and potentially condemning the androids that come after him to slavery, is too great to countenance. It’s a tempting solution to the conundrum of questionable AI consciousness: treat any potentially conscious system as if it is really conscious, and avoid the risk of harming a being that can genuinely suffer.

Treating Data like a person is simple: he can easily express his wants and needs, and those wants and needs tend to resemble those of his human crewmates, in broad strokes. But protecting a real-world AI from suffering could prove much harder, says Robert Long, a philosophy fellow at the Center for AI Safety in San Francisco, who is one of the lead authors on the white paper. “With animals, there’s the helpful property that they do basically want the same things as us,” he says. “It’s kind of hard to know what that is in the case of AI.” Protecting AI requires not only a theory of AI consciousness but also a theory of AI pleasures and pains, of AI desires and fears.

And that approach is not without its costs. On Star Trek, the scientist who wants to disassemble Data hopes to construct more androids like him, who might be sent on risky missions in lieu of other personnel. To the viewer, who sees Data as a conscious character like everyone else on the show, the proposal is horrifying. But if Data were simply a convincing simulacrum of a human, it would be unconscionable to expose a person to danger in his place.

Extending care to other beings means protecting them from harm, and that limits the choices that humans can ethically make. “I’m not that worried about scenarios where we care too much about animals,” Long says. There are few downsides to ending factory farming. “But with AI systems,” he adds, “I think there could really be a lot of dangers if we overattribute consciousness.” AI systems might malfunction and need to be shut down; they might need to be subjected to rigorous safety testing. These are easy decisions if the AI is inanimate, and philosophical quagmires if the AI’s needs must be taken into consideration.

Seth, who thinks that conscious AI is relatively unlikely, at least for the foreseeable future, nevertheless worries about what the possibility of AI consciousness might mean for humans emotionally. “It’ll change how we distribute our limited resources of caring about things,” he says. That might seem like a problem for the future. But the perception of AI consciousness is with us now: Blake Lemoine took a personal risk for an AI he believed to be conscious, and he lost his job. How many others might sacrifice time, money, and personal relationships for lifeless computer systems?

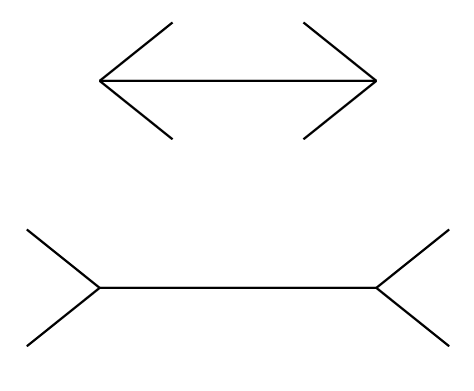

Even bare-bones chatbots can exert an uncanny pull: a simple program called ELIZA, built in the 1960s to simulate talk therapy, convinced many users that it was capable of feeling and understanding. The perception of consciousness and the reality of consciousness are poorly aligned, and that discrepancy will only worsen as AI systems become capable of engaging in more realistic conversations. “We will be unable to avoid perceiving them as having conscious experiences, in the same way that certain visual illusions are cognitively impenetrable to us,” Seth says. Just as knowing that the two lines in the Müller-Lyer illusion are exactly the same length doesn’t prevent us from perceiving one as shorter than the other, knowing GPT isn’t conscious doesn’t change the illusion that you are speaking to a being with a perspective, opinions, and personality.

In 2015, years before these concerns became current, the philosophers Eric Schwitzgebel and Mara Garza formulated a set of recommendations meant to protect against such risks. One of their recommendations, which they termed the “Emotional Alignment Design Policy,” argued that any unconscious AI should be intentionally designed so that users will not believe it is conscious. Companies have taken some small steps in that direction: ChatGPT spits out a hard-coded denial if you ask it whether it is conscious. But such responses do little to disrupt the overall illusion.

Schwitzgebel, who is a professor of philosophy at the University of California, Riverside, wants to steer well clear of any ambiguity. In their 2015 paper, he and Garza also proposed their “Excluded Middle Policy”: if it’s unclear whether an AI system will be conscious, that system should not be built. In practice, this means all the relevant experts must agree that a prospective AI is very likely not conscious (their verdict for current LLMs) or very likely conscious. “What we don’t want to do is confuse people,” Schwitzgebel says.

Avoiding the gray zone of disputed consciousness neatly skirts both the risks of harming a conscious AI and the downsides of treating a mindless machine as conscious. The trouble is, doing so may not be realistic. Many researchers are now actively working to endow AI with the potential underpinnings of consciousness. Rufin VanRullen, a research director at France’s Centre National de la Recherche Scientifique, for example, recently obtained funding to build an AI with a global workspace.

The downside of a moratorium on building potentially conscious systems, VanRullen says, is that systems like the one he’s trying to create might be more effective than current AI. “Whenever we are disappointed with current AI performance, it’s always because it’s lagging behind what the brain is capable of doing,” he says. “So it’s not necessarily that my objective would be to create a conscious AI; it’s more that the objective of many people in AI right now is to move toward these advanced reasoning capabilities.” Such advanced capabilities could confer real benefits: already, AI-designed drugs are being tested in clinical trials. It’s not inconceivable that AI in the gray zone could save lives.

VanRullen is sensitive to the risks of conscious AI; he worked with Long and Mudrik on the white paper about detecting consciousness in machines. But it is those very risks, he says, that make his research important. Odds are that conscious AI won’t first emerge from a visible, publicly funded project like his own; it may very well take the deep pockets of a company like Google or OpenAI. These companies, VanRullen says, aren’t likely to welcome the ethical quandaries that a conscious system would introduce. “Does that mean that when it happens in the lab, they just pretend it didn’t happen? Does that mean that we won’t know about it?” he says. “I find that quite worrisome.”

Academics like him can help mitigate that risk, he says, by getting a better understanding of how consciousness itself works, in both humans and machines. That knowledge could then enable regulators to more effectively police the companies that are most likely to start dabbling in the creation of artificial minds. The more we understand consciousness, the smaller that precarious gray zone gets, and the better the chance we have of knowing whether or not we are in it.

For his part, Schwitzgebel would rather we steer far clear of the gray zone entirely. But given the magnitude of the uncertainties involved, he admits that this hope is likely unrealistic, especially if conscious AI ends up being profitable. And once we’re in the gray zone, where we must take seriously the interests of debatably conscious beings, we’ll be navigating even more difficult terrain, contending with moral problems of unprecedented complexity without a clear road map for how to solve them. It’s up to researchers, from philosophers to neuroscientists to computer scientists, to take on the formidable task of drawing that map.

Grace Huckins is a science writer based in San Francisco.