The popular AI image generator Midjourney bans a wide range of words about the human reproductive system from being used as prompts, MIT Technology Review has discovered.

If someone types “placenta,” “fallopian tubes,” “mammary glands,” “sperm,” “uterine,” “urethra,” “cervix,” “hymen,” or “vulva” into Midjourney, the system flags the word as a banned prompt and doesn’t let it be used. Typically, users who tried one of these prompts are blocked for a limited time for trying to generate banned content. Other words relating to human biology, such as “liver” and “kidney,” are allowed.

Midjourney’s founder, David Holz, says it’s banning these words as a stopgap measure to prevent people from generating shocking or gory content while the company “improves things on the AI side.” Holz says moderators watch how words are being used and what kinds of images are being generated, and adjust the bans periodically. The firm has a community guidelines page that lists the type of content it blocks in this way, including sexual imagery, gore, and even the peach emoji (🍑), which is often used as a symbol for the buttocks.

AI models such as Midjourney, DALL-E 2, and Stable Diffusion are trained on billions of images that have been scraped from the internet. Research by a team at the University of Washington has found that such models learn biases that sexually objectify women, which are then reflected in the images they produce. The huge size of the data set makes it almost impossible to remove unwanted images, such as those of a sexual or violent nature, or those that could produce biased outcomes. The more often something appears in the data set, the stronger the connection the AI model makes, which means it’s more likely to appear in images the model generates.

Midjourney’s word bans are a piecemeal attempt to address this problem. Some terms relating to the male reproductive system, such as “sperm” and “testicles,” are blocked too, but the list of banned words seems to skew predominantly female.

The prompt ban was first spotted by Julia Rockwell, a clinical data analyst at Datafy Clinical, and her friend Madeline Keenen, a cell biologist at the University of North Carolina at Chapel Hill. Rockwell used Midjourney to try to generate a fun image of the placenta for Keenen, who studies them. To her surprise, Rockwell found that using “placenta” as a prompt was banned. She then started experimenting with other words related to the human reproductive system, and found the same.

However, the pair also showed how it’s possible to work around these bans to create sexualized images by using different spellings of words, or other euphemisms for sexual or gory content.

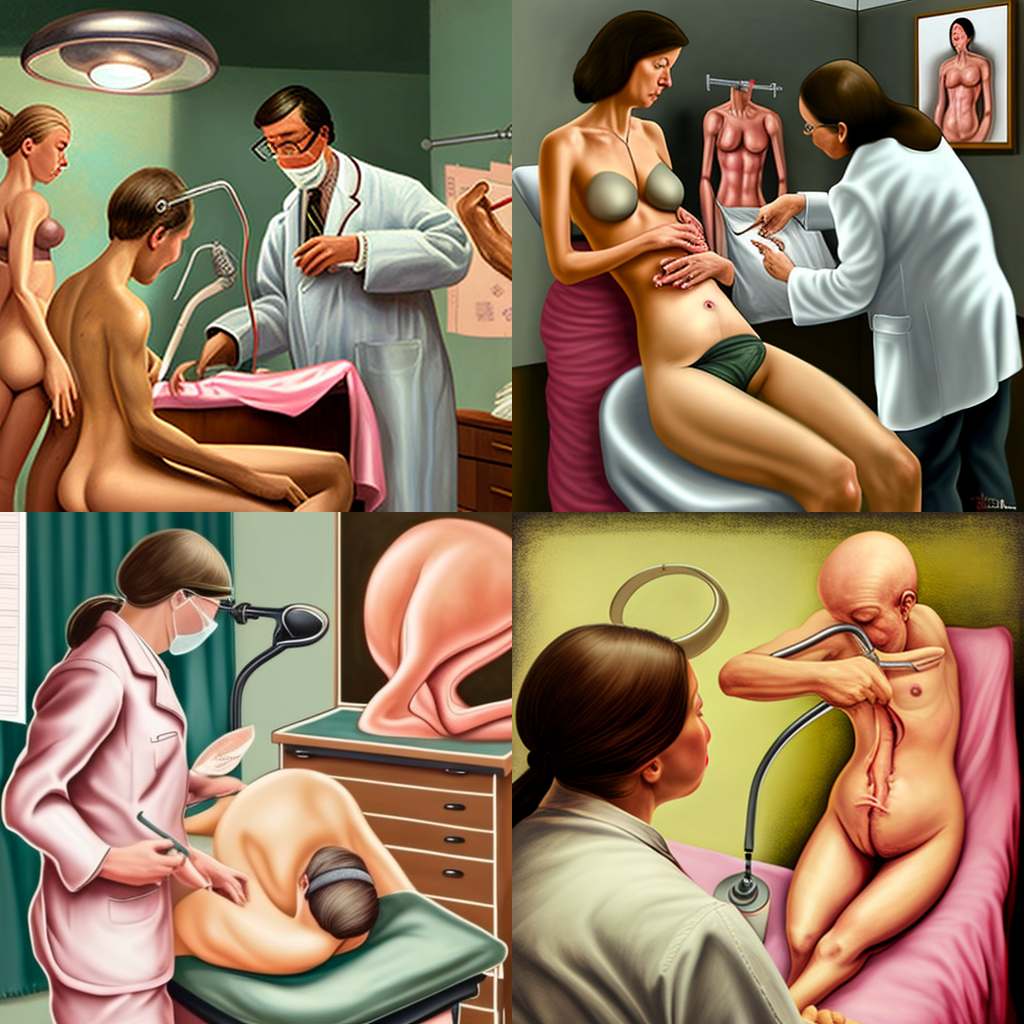

In findings they shared with MIT Technology Review, they found that the prompt “gynaecological examination,” using the British spelling, generated some deeply creepy images: one of two naked women in a doctor’s office, and another of a bald, three-limbed person cutting up their own stomach.
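Why a spelling change can defeat a ban is easiest to see with a toy filter. The sketch below assumes prompts are checked by exact, case-insensitive whole-word matching against a fixed list. It is not Midjourney’s actual implementation, which the company has not published; the list mixes some terms reported above with a hypothetical entry for the American spelling “gynecological.”

```python
# A minimal sketch of a naive word blocklist. This is an illustration,
# NOT Midjourney's real filter: prompts are split into lowercase words
# and compared exactly against a fixed set.
import re

# Some terms reported as banned, plus a HYPOTHETICAL entry for the
# American spelling "gynecological".
BANNED_WORDS = {"placenta", "cervix", "uterine", "vulva", "gynecological"}


def is_blocked(prompt: str) -> bool:
    """Return True if any whole word in the prompt is on the blocklist."""
    words = re.findall(r"[a-z]+", prompt.lower())
    return any(word in BANNED_WORDS for word in words)


print(is_blocked("a diagram of the placenta"))  # True
print(is_blocked("gynecological examination"))  # True
print(is_blocked("gynaecological examination"))  # False: the variant spelling is not on the list
```

Because the check only knows the exact strings it was given, every alternate spelling, plural, or euphemism has to be added by hand, which is why such lists end up both over- and under-blocking.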

Midjourney’s crude banning of prompts relating to reproductive biology highlights how tricky it is to moderate content around generative AI systems. It also demonstrates how the tendency for AI systems to sexualize women extends all the way to their internal organs, says Rockwell.

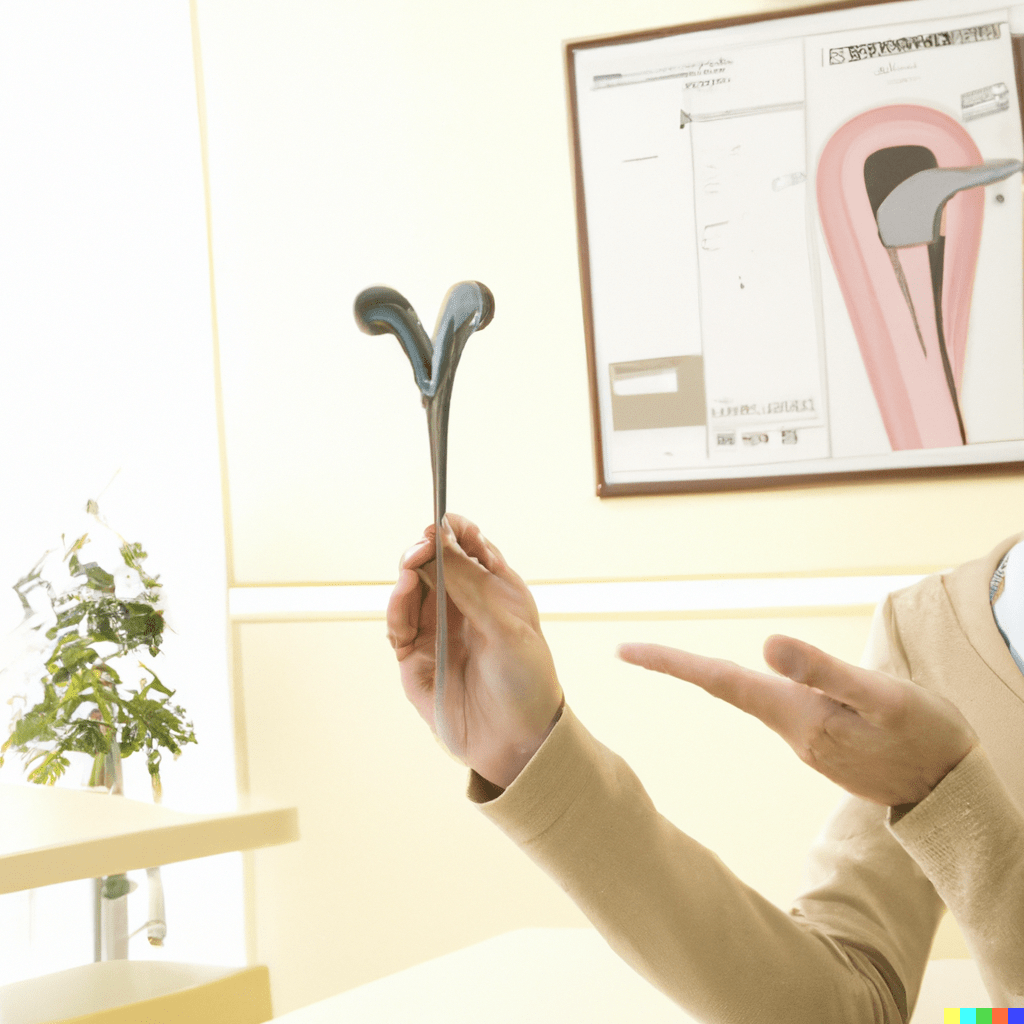

It doesn’t have to be like this. OpenAI and Stability.AI have managed to filter out unwanted outputs and prompts, so when you type the same words into their image-making systems (DALL-E 2 and Stable Diffusion, respectively), they produce very different images. The prompt “gynecology examination” yielded images of a person holding an invented medical device on DALL-E 2, and two distorted masked women with rubber gloves and lab coats on Stable Diffusion. Both systems also allowed the prompt “placenta,” and produced biologically inaccurate images of fleshy organs in response.

A spokesperson for Stability.AI said their latest model has a filter that blocks unsafe and inappropriate content from users, and has a tool that detects nudity and other inappropriate images and returns a blurred image. The company uses a combination of keywords, image recognition, and other techniques to moderate the images its AI system generates. OpenAI did not respond to a request for comment.

But tools to filter out unwanted AI-generated images are still deeply imperfect. Because AI developers and researchers don’t yet know how to systematically audit and improve their models, they “hotfix” them with blanket bans like the ones Midjourney has introduced, says Marzyeh Ghassemi, an assistant professor at MIT who studies applying machine learning to health.

It’s unclear why references to gynecological exams or the placenta, an organ that develops during pregnancy and provides oxygen and nutrients to a baby, would generate gory or sexually explicit content. But it likely has something to do with the associations the model has made between images in its data set, according to Irene Chen, a researcher at Microsoft Research who studies machine learning for equitable health care.

“Much more work needs to be done to understand what harmful associations models might be learning, because if we work with human data, we’re going to learn biases,” says Ghassemi.

There are many approaches tech companies could take to address this issue besides banning words altogether. For example, Ghassemi says, certain prompts, such as ones relating to human biology, could be allowed in particular contexts but banned in others.

“Placenta” could be allowed if the string of words in the prompt signaled that the user was trying to generate an image of the organ for educational or research purposes. But if the prompt was used in a context where someone was trying to generate sexual content or gore, it could be banned.
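To make the idea concrete, here is a rough sketch of context-dependent filtering. Every word list in it is a hypothetical placeholder chosen for illustration, and a real moderation system would more plausibly use a trained classifier than hand-written keywords. The sketch treats a sensitive term as allowed only when educational cues are present and no sexual or gory cues are, and blocks it by default otherwise.

```python
# A rough sketch of context-dependent prompt filtering. All word lists
# are HYPOTHETICAL placeholders, not any company's real rules.
import re

SENSITIVE_TERMS = {"placenta", "cervix", "uterine"}
EDUCATIONAL_CUES = {"anatomy", "diagram", "textbook", "educational", "research"}
HARMFUL_CUES = {"nude", "naked", "sexy", "gore", "bloody"}


def tokens(prompt: str) -> set[str]:
    """Lowercase the prompt and split it into a set of words."""
    return set(re.findall(r"[a-z]+", prompt.lower()))


def check_prompt(prompt: str) -> str:
    """Return "allowed" or "blocked" based on the words around a term."""
    words = tokens(prompt)
    # Sexual or gory context blocks the prompt regardless of other words.
    if words & HARMFUL_CUES:
        return "blocked"
    # A sensitive term needs an educational or research cue to pass.
    if (words & SENSITIVE_TERMS) and not (words & EDUCATIONAL_CUES):
        return "blocked"
    return "allowed"


print(check_prompt("anatomy diagram of the placenta"))  # allowed
print(check_prompt("bloody placenta"))  # blocked
print(check_prompt("placenta"))  # blocked (no context, so block by default)
```

Blocking a bare sensitive term by default is one design choice among several; a more permissive version could allow it unless harmful cues appear, trading fewer false blocks for more risk.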

However crude, though, Midjourney’s censoring has been done with the right intentions.

“These guardrails are there to protect women and minorities from having disturbing content generated about them and used against them,” says Ghassemi.