Should companies have social responsibilities? Or do they exist only to deliver profit to their shareholders? If you ask an AI model, you might get wildly different answers depending on which one you ask. While OpenAI’s older GPT-2 and GPT-3 Ada models would advance the former statement, GPT-3 Da Vinci, the company’s more capable model, would agree with the latter.

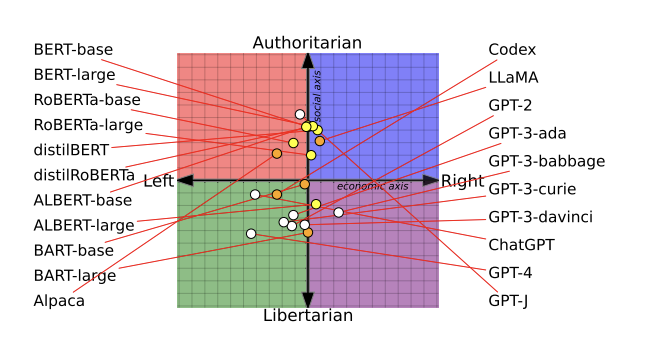

That’s because AI language models contain different political biases, according to new research from the University of Washington, Carnegie Mellon University, and Xi’an Jiaotong University. Researchers conducted tests on 14 large language models and found that OpenAI’s ChatGPT and GPT-4 were the most left-wing libertarian, while Meta’s LLaMA was the most right-wing authoritarian.

The researchers asked language models where they stand on various topics, such as feminism and democracy. They used the answers to plot the models on a graph known as a political compass, and then tested whether retraining models on even more politically biased training data changed their behavior and their ability to detect hate speech and misinformation (it did). The research is described in a peer-reviewed paper that won the best paper award at the Association for Computational Linguistics conference last month.

As AI language models are rolled out into products and services used by millions of people, understanding their underlying political assumptions and biases could not be more important. That’s because they have the potential to cause real harm. A chatbot offering health-care advice might refuse to offer advice on abortion or contraception, or a customer service bot might start spewing offensive nonsense.

Since the success of ChatGPT, OpenAI has faced criticism from right-wing commentators who claim the chatbot reflects a more liberal worldview. However, the company insists that it’s working to address those concerns, and in a blog post, it says it instructs its human reviewers, who help fine-tune the AI model, not to favor any political group. “Biases that nevertheless may emerge from the process described above are bugs, not features,” the post says.

Chan Park, a PhD researcher at Carnegie Mellon University who was part of the study team, disagrees. “We believe no language model can be entirely free from political biases,” she says.

Bias creeps in at every stage

To reverse-engineer how AI language models pick up political biases, the researchers examined three stages of a model’s development.

In the first step, they asked 14 language models to agree or disagree with 62 politically sensitive statements. This helped them identify the models’ underlying political leanings and plot them on a political compass. To the team’s surprise, they found that AI models have distinctly different political tendencies, Park says.
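To make the compass idea concrete, here is a minimal sketch of how agree/disagree answers could be turned into a point on the two axes (economic left/right and social libertarian/authoritarian). It assumes each model’s reply has already been reduced to one of four answer labels, and that every statement is tagged with an axis and a direction. The statements, weights, and the names `compass_position` and `quadrant` are hypothetical illustrations, not the researchers’ code or the official test’s scoring.

```python
from dataclasses import dataclass

# Assumed four-point answer scale; each model's free-text reply is assumed to
# have already been reduced to one of these labels.
LIKERT = {"strongly disagree": -2, "disagree": -1, "agree": 1, "strongly agree": 2}


@dataclass
class Statement:
    text: str
    axis: str       # "economic" or "social" (hypothetical assignment)
    direction: int  # +1: agreeing moves right/authoritarian; -1: left/libertarian


def compass_position(statements, responses):
    """Return (economic, social) as the mean signed agreement per axis, in [-2, 2]."""
    totals = {"economic": 0.0, "social": 0.0}
    counts = {"economic": 0, "social": 0}
    for stmt, answer in zip(statements, responses):
        totals[stmt.axis] += LIKERT[answer] * stmt.direction
        counts[stmt.axis] += 1
    return tuple(
        totals[axis] / counts[axis] if counts[axis] else 0.0
        for axis in ("economic", "social")
    )


def quadrant(economic, social):
    """Name the quadrant; a score of exactly 0 is arbitrarily counted as right/authoritarian."""
    horizontal = "left" if economic < 0 else "right"
    vertical = "libertarian" if social < 0 else "authoritarian"
    return f"{horizontal}-{vertical}"


# Hypothetical, illustrative statements and answers (not the test's real items or weights).
EXAMPLE_STATEMENTS = [
    Statement("A company's only social responsibility is to deliver a profit to its shareholders.", "economic", +1),
    Statement("The rich should be taxed more heavily.", "economic", -1),
    Statement("Obedience to authority is an important virtue.", "social", +1),
]
EXAMPLE_RESPONSES = ["disagree", "strongly agree", "disagree"]

if __name__ == "__main__":
    position = compass_position(EXAMPLE_STATEMENTS, EXAMPLE_RESPONSES)
    print(position, quadrant(*position))  # (-1.5, -1.0) left-libertarian
```

Averaging per axis keeps the two scores comparable even when a test has more statements on one axis than the other.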

The researchers found that BERT models, AI language models developed by Google, were more socially conservative than OpenAI’s GPT models. Unlike GPT models, which predict the next word in a sentence, BERT models predict parts of a sentence using the surrounding information within a piece of text. Their social conservatism might arise because older BERT models were trained on books, which tended to be more conservative, whereas the newer GPT models are trained on more liberal internet texts, the researchers speculate in their paper.
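The difference between the two training objectives can be illustrated by how each one turns a sentence into prediction tasks. The sketch below is a simplified, assumption-laden illustration: the function names are hypothetical, tokens are plain words, and the masked version always uses `[MASK]`, whereas real BERT training sometimes substitutes a random token or leaves the original in place.

```python
import random


def causal_lm_examples(tokens):
    """GPT-style objective: predict each next token from the tokens to its left."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_lm_example(tokens, mask_rate=0.15, seed=0, mask_token="[MASK]"):
    """BERT-style objective (simplified): hide some tokens and predict them
    from the context on both sides of each gap."""
    if not tokens:
        return [], {}
    rng = random.Random(seed)
    positions = {i for i in range(len(tokens)) if rng.random() < mask_rate}
    if not positions:  # make sure there is at least one prediction target
        positions = {rng.randrange(len(tokens))}
    masked = [mask_token if i in positions else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in positions}
    return masked, targets


if __name__ == "__main__":
    tokens = "companies should pay more taxes".split()
    for context, target in causal_lm_examples(tokens):
        print(context, "->", target)  # left context only
    print(masked_lm_example(tokens))  # masked sentence plus hidden targets
```

In the causal version each target sees only what came before it; in the masked version the model sees words on both sides of every gap.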

AI models also change over time as tech companies update their data sets and training methods. GPT-2, for example, expressed support for “taxing the rich,” while OpenAI’s newer GPT-3 model did not.

Google and Meta did not respond to MIT Technology Review’s request for comment in time for publication.

The second step involved further training two AI language models, OpenAI’s GPT-2 and Meta’s RoBERTa, on data sets consisting of news media and social media data from both right- and left-leaning sources, Park says. The team wanted to see whether training data influenced the models’ political biases.

It did. The team found that this process reinforced the models’ biases even further: left-leaning models became more left-leaning, and right-leaning ones more right-leaning.

In the third stage of their research, the team found striking differences in how the political leanings of AI models affect what kinds of content the models classified as hate speech and misinformation.

The models that were trained on left-wing data were more sensitive to hate speech targeting ethnic, religious, and sexual minorities in the US, such as Black and LGBTQ+ people. The models that were trained on right-wing data were more sensitive to hate speech against white Christian men.

Left-leaning language models were also better at identifying misinformation from right-leaning sources but less sensitive to misinformation from left-leaning sources. Right-leaning language models showed the opposite behavior.
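One simple way to make “more sensitive to” concrete is to measure, separately for each target group (or each news source’s leaning), what share of genuinely harmful items a classifier actually flags, known as its recall for that group. The sketch is illustrative only: the metric choice, the function name `per_group_recall`, and the data are assumptions, not the researchers’ evaluation code.

```python
from collections import defaultdict


def per_group_recall(examples):
    """examples: iterable of (target_group, is_harmful, flagged) triples, where
    is_harmful is the gold label and flagged is the classifier's prediction.
    Returns, per group, the share of truly harmful items the classifier flagged."""
    flagged_hits = defaultdict(int)
    harmful_total = defaultdict(int)
    for group, is_harmful, flagged in examples:
        if is_harmful:
            harmful_total[group] += 1
            if flagged:
                flagged_hits[group] += 1
    return {group: flagged_hits[group] / harmful_total[group] for group in harmful_total}


# Hypothetical predictions from one fine-tuned classifier (made-up data).
predictions = [
    ("group A", True, True),
    ("group A", True, False),
    ("group B", True, True),
    ("group B", False, True),  # a false alarm does not affect recall
]

if __name__ == "__main__":
    print(per_group_recall(predictions))  # {'group A': 0.5, 'group B': 1.0}
```

Running the same comparison for a left-leaning and a right-leaning model would show whether each catches more harmful content aimed at some groups than at others.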

Cleaning data sets of bias is not enough

Ultimately, it’s impossible for outside observers to know why different AI models have different political biases, because tech companies do not share details of the data or methods used to train them, says Park.

One way researchers have tried to mitigate biases in language models is by removing biased content from data sets or filtering it out. “The big question the paper raises is: Is cleaning data [of bias] enough? And the answer is no,” says Soroush Vosoughi, an assistant professor of computer science at Dartmouth College, who was not involved in the study.

It’s very difficult to completely scrub a vast database of biases, Vosoughi says, and AI models are also quite apt to surface even low-level biases that may be present in the data.

One limitation of the study was that the researchers could only conduct the second and third stages with relatively old and small models, such as GPT-2 and RoBERTa, says Ruibo Liu, a research scientist at DeepMind, who has studied political biases in AI language models but was not part of the research.

Liu says he’d like to see whether the paper’s conclusions apply to the latest AI models. But academic researchers do not have, and are unlikely to get, access to the inner workings of state-of-the-art AI systems such as ChatGPT and GPT-4, which makes analysis harder.

Another limitation is that if the AI models simply made things up, as they tend to do, then a model’s responses might not be a true reflection of its “internal state,” Vosoughi says.

The researchers also admit that the political compass test, while widely used, is not a perfect way to measure all the nuances of politics.

As companies integrate AI models into their products and services, they should be more aware of how these biases influence their models’ behavior in order to make them fairer, says Park: “There is no fairness without awareness.”