In middle school and high school, Nora Tan downloaded the big three of social media. It was only natural. “I grew up in the age when social media was really taking off,” says Tan, a Seattle-based product manager. “I created a Facebook account in 2009, an Instagram account in 2010, a Twitter account after that when I was in high school.”

By the time she reached college, Tan was questioning her decision. “I thought about how content should be moderated and how it was being used in political campaigns and to advance agendas,” she says. Troubled by what she found, Tan deleted Twitter, used Instagram only to follow nature accounts and close friends, and downloaded a Chrome extension so that when she typed Facebook into her search bar, she was served only notifications from her friends.

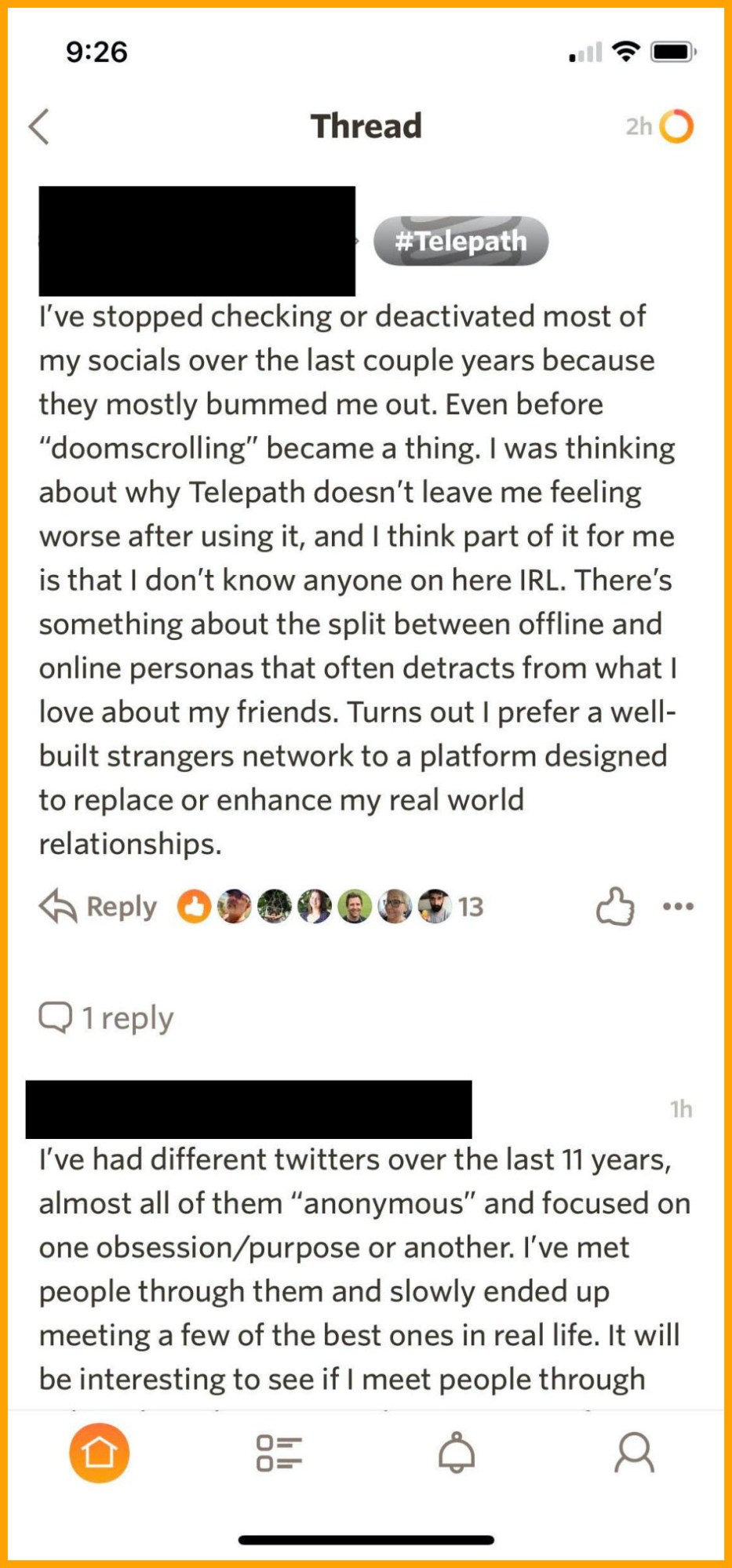

So she was “pretty cynical” when a friend invited her to be a private beta tester for a new social-media platform, Telepath, in March 2019. She was particularly skeptical as a woman of color in tech. Eighteen months on, it’s now Tan’s only form of social media.

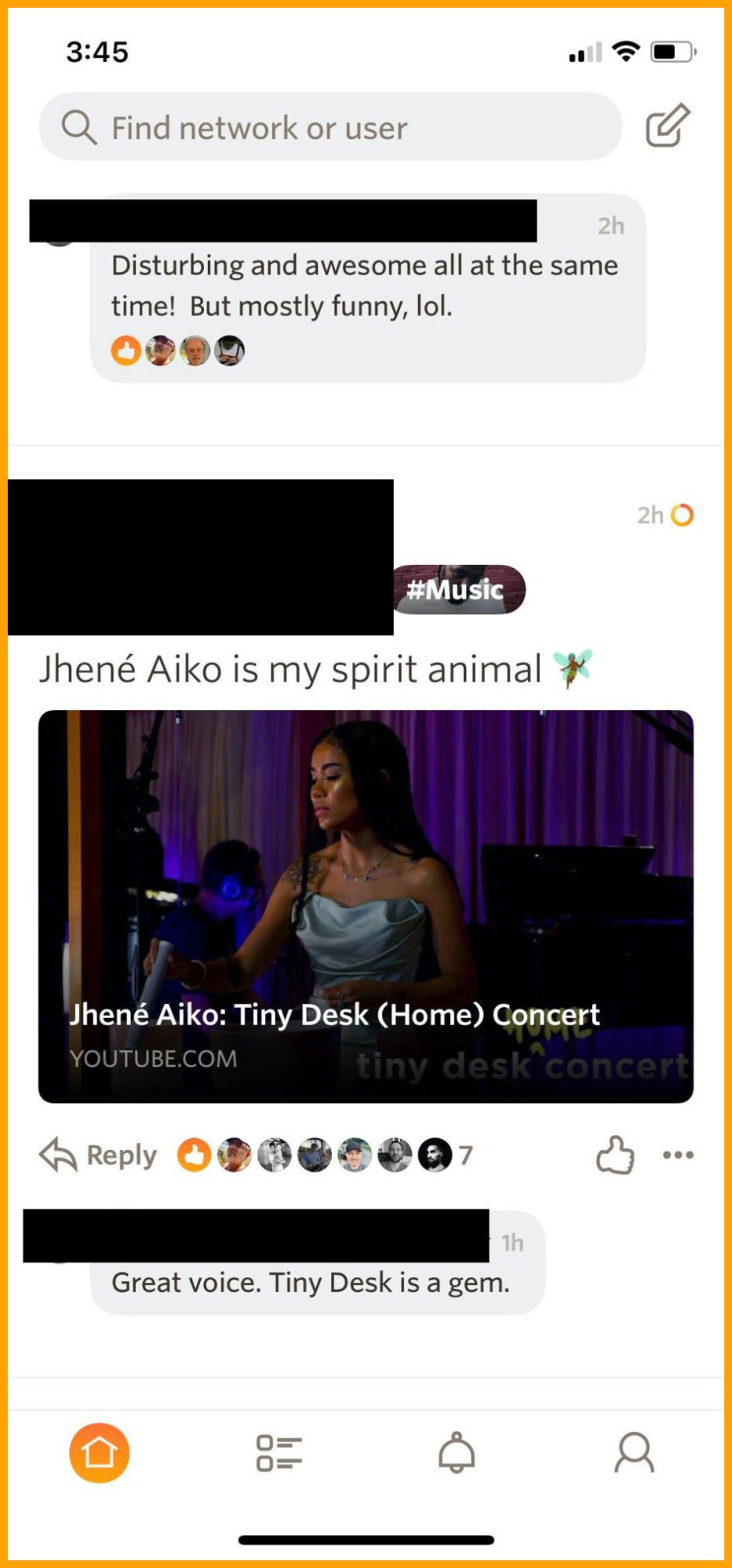

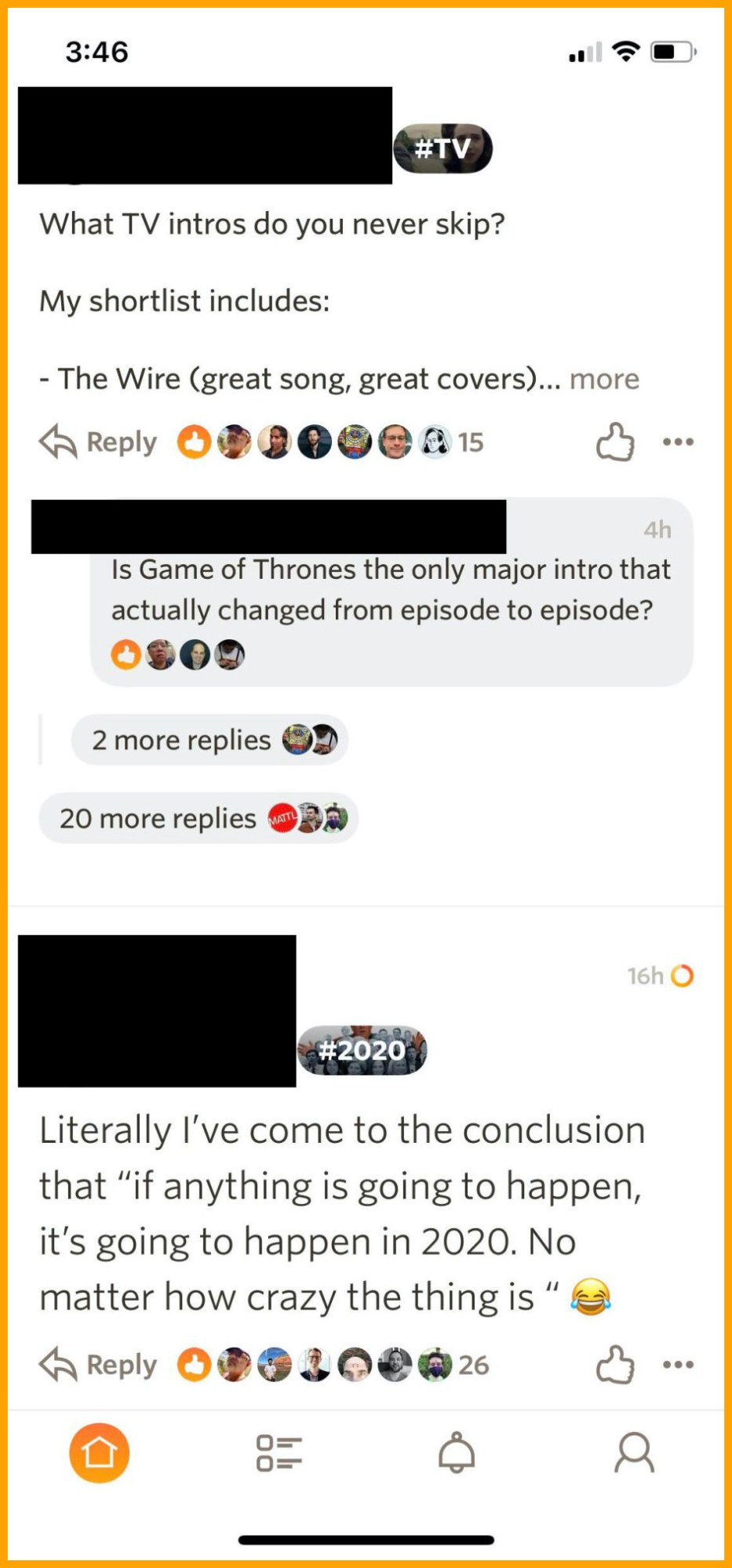

Telepath was cofounded by former Quora head Marc Bodnick, and it shows: its format feels similar to Quora’s in some ways. The invite-only app allows users to follow people or topics. Threads combine the urgency of Twitter with the ephemerality of Snapchat (posts disappear after 30 days).

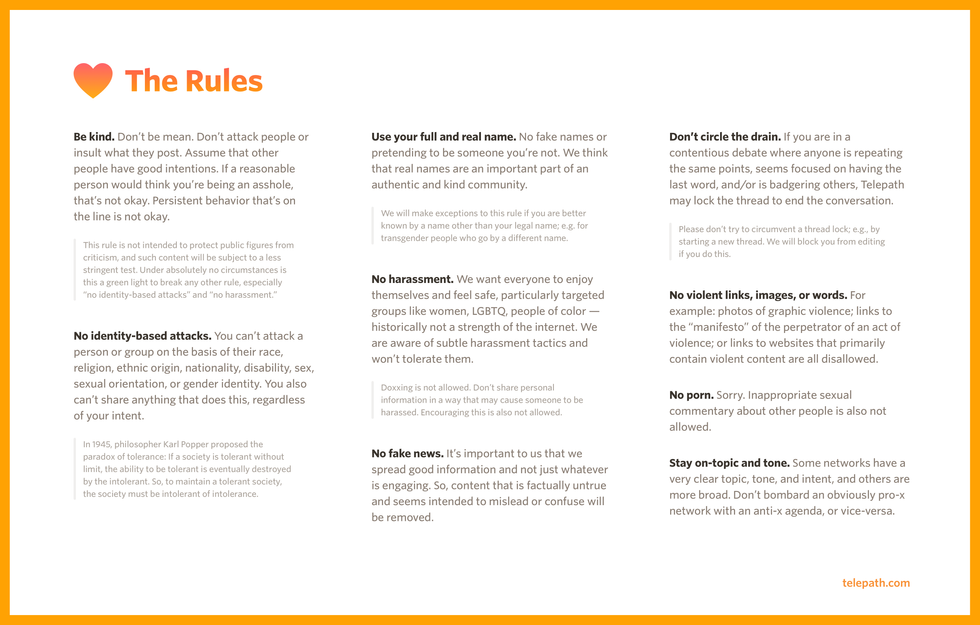

This isn’t particularly remarkable, but Telepath’s big selling point is: an in-house content moderation team enforces kindness, and users are required to display their real names.

Telepath’s name policy is meant to make sure the content moderation team can fully focus on spotting abuse rather than playing whack-a-mole with burner accounts. It’s also a way to humanize conversation. “We require unique telephone numbers and verification so it can’t be a throwaway number on Google Voice,” says Tatiana Estévez, the head of community and safety. “Multiple sock-puppet accounts are where you get some of the nastiest stuff on other platforms.”

The policy has already invited some criticism from onlookers who feel it might endanger women and marginalized communities.

“There’s a widely held belief that if people use their legal names they’ll behave better in social environments because other people can identify them and there can be social consequences for their actions,” says J. Nathan Matias, a professor and founder of the Citizens and Technology Lab at Cornell University. “While there was some early evidence for this in the 1980s, that evidence wasn’t with a diverse group of people, and it didn’t account for what we now see the internet has become.”

In fact, many people who use pseudonyms are from more marginalized or vulnerable groups and do so to keep themselves safe from online harassment and doxxing.

Doxxing, the use of identifying information online to harass and threaten, is a life-threatening issue for many marginalized people and has soured them on social media like Facebook and Twitter, which have come under fire for not protecting at-risk individuals (though both Twitter and Facebook have tried to make amends of late by removing tweets promoting misinformation, for example). Estévez says that she’s “very empathetic about this” and that trans people can identify themselves by their chosen name and pronouns, but that phone-name verification and the app’s invite-only structure were necessary to prevent abusive behavior.

Estévez, who spent years as a volunteer moderator at Quora, imported Quora’s community guideline of “Be Nice, Be Respectful” to Telepath. “People respond well to being treated well,” she says. “People are happier. People are really drawn to kind communities and places where they can have their say and not feel ridiculed.”

Telepath’s team has been very deliberate with its language about this concept. “We made the decision to use the word ‘kindness’ instead of ‘civil,’” Bodnick says. “Civil implies a rule you can get to the edge of and not break, like you were ‘just being curious’ or ‘just asking questions.’ We think kindness is a good way of describing good intent, giving each other the benefit of the doubt, not engaging in personal attacks. We hope it’s the strength to make these assessments that attracts people.”

Historically, platforms have been reluctant to enforce basic user safety, let alone kindness, says Danielle Citron, a law professor at Boston University Law School who has written about content moderation and advised social-media platforms. “Niceness is not a bad idea,” Citron says.

It’s also extremely vague and subjective, though, especially when protecting some people can mean criticizing others. Questioning a certain point of view, even in a way that seems critical or unkind, can sometimes be necessary. Those who commit microaggressions need to be told what’s wrong with their actions. Someone repeating a slur needs to be confronted and educated. In a volatile US election year that has seen a racial reckoning unlike anything since the 1970s and a once-in-a-generation pandemic made worse by misinformation, perhaps being kind is no longer enough.

How will Telepath thread this needle? That will be down to the in-house content moderation team, whose job it will be to police “kindness” on the platform.

It won’t necessarily be easy. We’ve only recently begun understanding how traumatic content moderation can be thanks to a series of articles from Casey Newton, formerly at The Verge, which exposed the sweatshop-like conditions confronting moderators who work on contract at minimum wage. Even if these workers were paid better, the content many deal with is undeniably devastating. “Society is still figuring out how to make content moderation manageable for humans,” Matias says.

When asked about these issues, Estévez emphasizes that at Telepath content moderation will be “holistic” and the work is meant to be a career. “We’re not looking to have people do this for a few months and go,” she says. “I don’t have big concerns.”

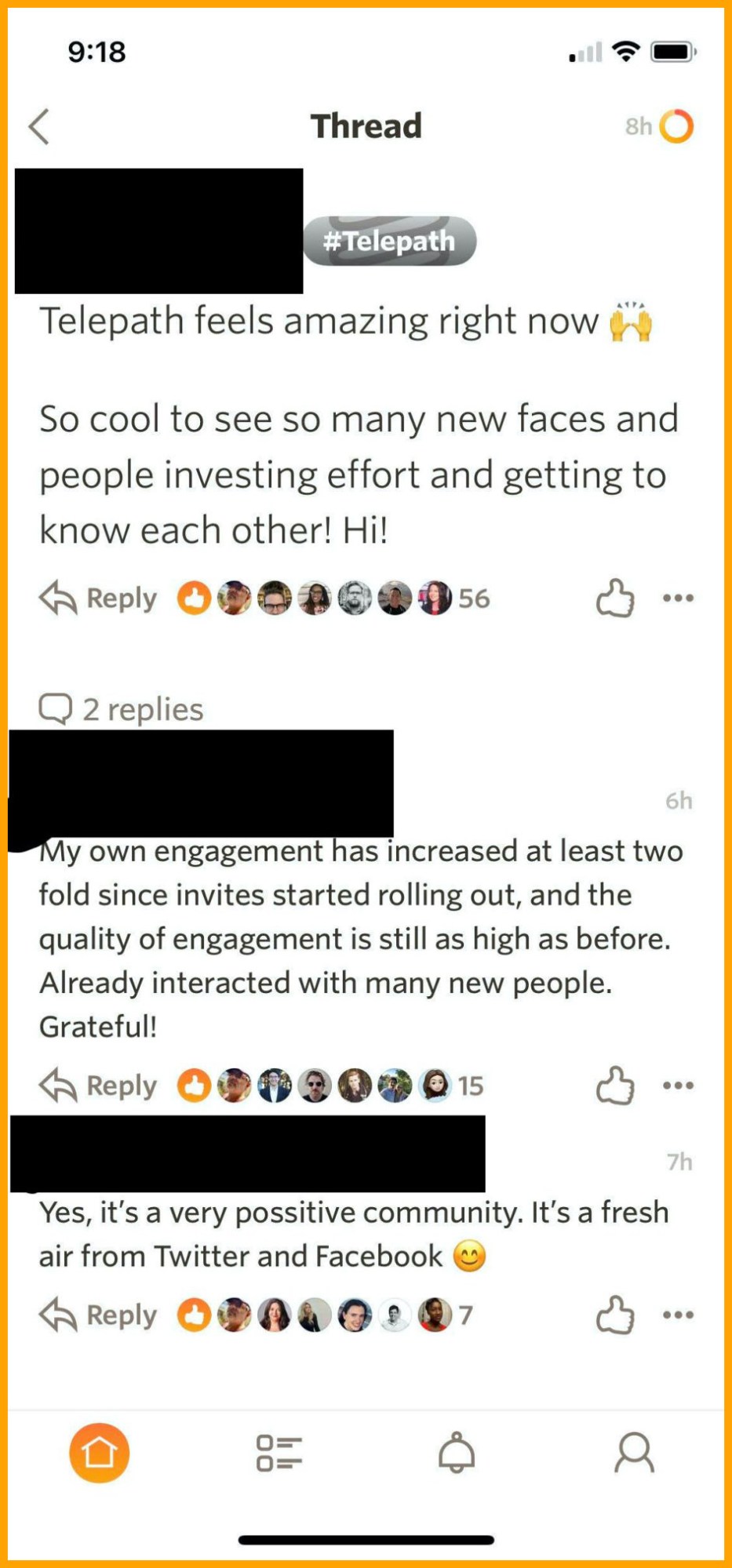

Telepath’s organizers believe that the invite-only model will help in this regard (the platform currently supports roughly 3,000 people). “By stifling growth to some extent, we’re going to make it better for ourselves,” says Estévez.

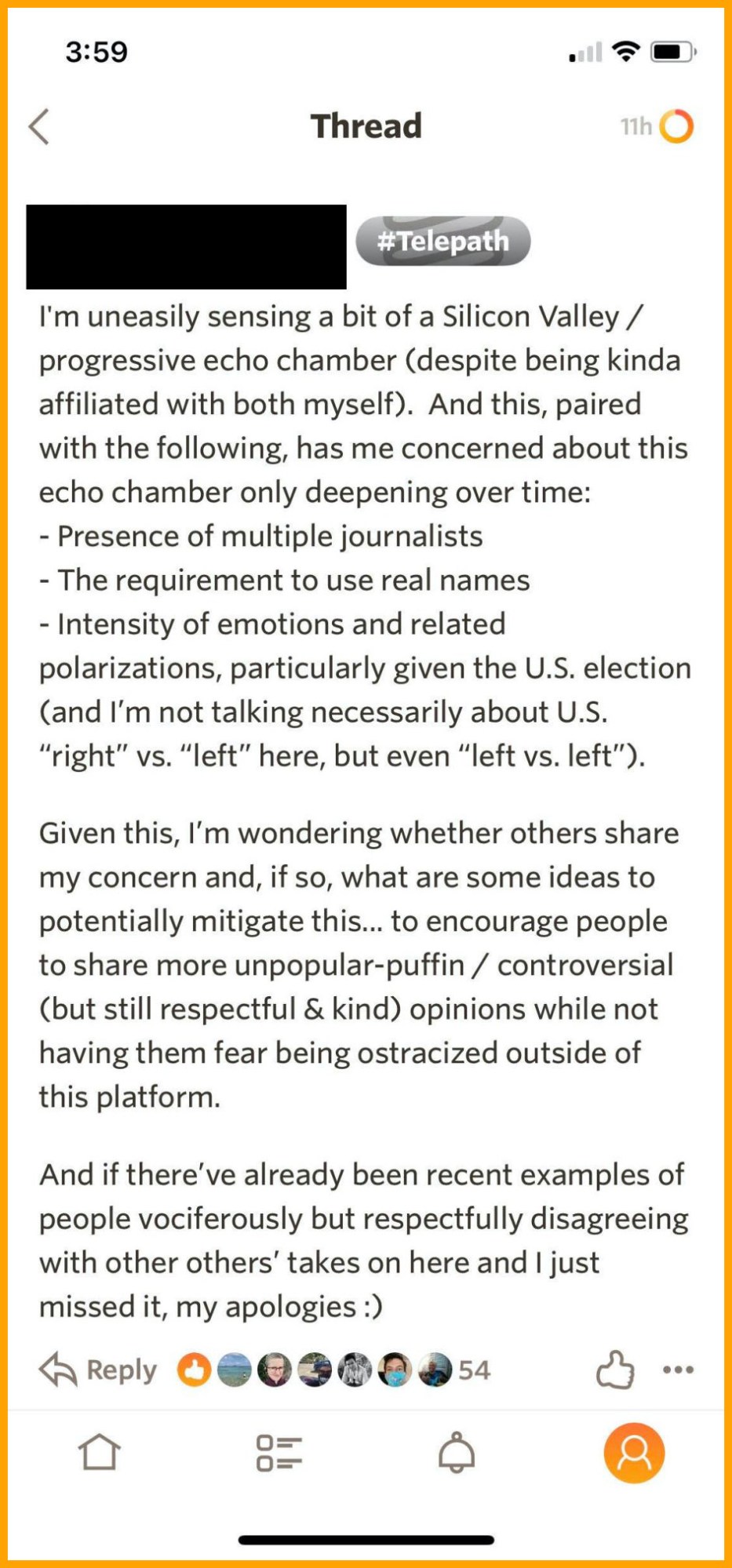

But the model presents another issue. “One of the pervasive problems that many social platforms that have launched in the US have had is a problem with diversity,” Matias says. “If they start with a group of users that are not diverse, then cultures can build up in the network that are unwelcoming and in some cases hostile to marginalized people.”

That fear of a hostile in-group culture is well founded. Clubhouse, an audio-first social-media app used by many with Silicon Valley ties, was launched to critical acclaim earlier this year, only to devolve into the type of misogynistic vitriol that has seeped into every corner of the internet. Just last week, it came under fire for anti-Semitism.

So far, Telepath has been dominated by Silicon Valley types, journalists, and others with spheres of influence outside the app. It’s not a diverse crowd, and Estévez says the team recognizes that. “It’s not just about inviting people; it’s not just about inviting women and Black people,” she says. “It’s so they [women and Black people] have a good experience, so they see other women and Black people and are not getting mansplained to or getting microaggressions.”

That is a tricky balance. On the one hand, maintaining an invite-only community of like-minded members allows Telepath to control the number of posts and members it must regulate. But that type of environment can also become an echo chamber that doesn’t challenge norms, defeating the purpose of conversation in the first place and potentially offering a hostile reception to outsiders.

Tan, the early adopter, says that the people she’s interacting with certainly fall into a type: they’re left-leaning and tech-y. “The first people who were using it were coming from Marc [Bodnick] or Richard [Henry]’s networks,” she says, referring to the cofounders. “It tends to be a lot of tech people.” Tan says the app’s conversations are wide-ranging, though, and she has been “pleasantly surprised” at the depth of discussion.

“Any social-media site can be an echo chamber, depending on who you follow,” she adds.

Telepath is ultimately in a tug-of-war: Is it possible to encourage lively-yet-decent debate on a platform without seeing it devolve into harassment? Most users assume that being online involves taking a certain amount of abuse, particularly if you’re a woman or from a marginalized group. Ideally, that doesn’t have to be the case.

“They need to be committed, that this isn’t just lip service about ‘being kind,’” Citron says. “Folks often roll out products in beta form and then think about harm, but then it’s too late. That’s unfortunately the story of the internet.” So far, but perhaps it doesn’t have to be.