Artificial intelligence has given the criminal underworld a big boost in productivity.

Generative AI provides a new, powerful toolkit that allows malicious actors to work far more efficiently and internationally than ever before, says Vincenzo Ciancaglini, a senior threat researcher at the security company Trend Micro.

Most criminals are “not living in some dark lair and plotting things,” says Ciancaglini. “Most of them are regular folks that carry on regular activities that require productivity as well.”

Last year saw the rise and fall of WormGPT, an AI language model built on top of an open-source model and trained on malware-related data, which was created to assist hackers and had no ethical rules or restrictions. But last summer, its creators announced they were shutting the model down after it started attracting media attention. Since then, cybercriminals have mostly stopped developing their own AI models. Instead, they are opting for tricks with existing tools that work reliably.

That’s because criminals want an easy life and quick gains, Ciancaglini explains. For any new technology to be worth the unknown risks of adopting it (for example, a higher risk of getting caught), it has to be better and bring higher rewards than what they’re currently using.

Here are five ways criminals are using AI now.

Phishing

The biggest use case for generative AI among criminals right now is phishing, which involves trying to trick people into revealing sensitive information that can be used for malicious purposes, says Mislav Balunović, an AI security researcher at ETH Zurich. Researchers have found that the rise of ChatGPT has been accompanied by a huge spike in the number of phishing emails.

Spam-generating services, such as GoMail Pro, have ChatGPT integrated into them, which allows criminal users to translate or improve the messages sent to victims, says Ciancaglini. OpenAI’s policies prohibit people from using its products for illegal activities, but that is difficult to police in practice, because many innocent-sounding prompts could be used for malicious purposes too, he adds.

OpenAI says it uses a mix of human reviewers and automated systems to identify and enforce against misuse of its models, and issues warnings, temporary suspensions, and bans if users violate the company’s policies.

“We take the safety of our products seriously and are continually improving our safety measures based on how people use our products,” a spokesperson for OpenAI told us. “We are constantly working to make our models safer and more robust against abuse and jailbreaks, while also maintaining the models’ usefulness and task performance,” they added.

In a report from February, OpenAI said it had closed five accounts associated with state-affiliated malicious actors.

In the past, so-called Nigerian prince scams, in which someone promises the victim a large sum of money in exchange for a small up-front payment, were relatively easy to spot because the English in the messages was clumsy and riddled with grammatical errors, Ciancaglini says. Language models allow scammers to generate messages that sound like something a native speaker would have written.

“English speakers used to be relatively safe from non-English-speaking [criminals] because you could spot their messages,” Ciancaglini says. That’s not the case anymore.

Thanks to better AI translation, criminal groups around the world can also communicate better with each other. The risk is that they could coordinate large-scale operations that reach beyond their own borders and target victims in other countries, says Ciancaglini.

Deepfake audio scams

Generative AI has allowed deepfake development to take a big leap forward, with synthetic images, videos, and audio looking and sounding more realistic than ever. This has not gone unnoticed by the criminal underworld.

Earlier this year, an employee in Hong Kong was reportedly scammed out of $25 million after cybercriminals used a deepfake of the company’s chief financial officer to convince the employee to transfer the money to the scammer’s account. “We’ve seen deepfakes finally being marketed in the underground,” says Ciancaglini. His team found people on platforms such as Telegram showing off their “portfolio” of deepfakes and selling their services for as little as $10 per image or $500 per minute of video. One of the most popular people for criminals to deepfake is Elon Musk, says Ciancaglini.

And while deepfake videos remain complicated to make and easier for humans to spot, that is not the case for audio deepfakes. They are cheap to make and require only a couple of seconds of someone’s voice (taken, for example, from social media) to generate something scarily convincing.

In the US, there have been high-profile cases where people have received distressing calls from loved ones saying they’ve been kidnapped and asking for money to be freed, only for the caller to turn out to be a scammer using a deepfake voice recording.

“People need to be aware that now these things are possible, and people need to be aware that now the Nigerian king doesn’t speak in broken English anymore,” says Ciancaglini. “People can call you with another voice, and they can put you in a very stressful situation,” he adds.

There are some ways for people to protect themselves, he says. Ciancaglini recommends that loved ones agree on a secret safe word, changed regularly, that can help confirm the identity of the person on the other end of the line.

“I password-protected my grandma,” he says.

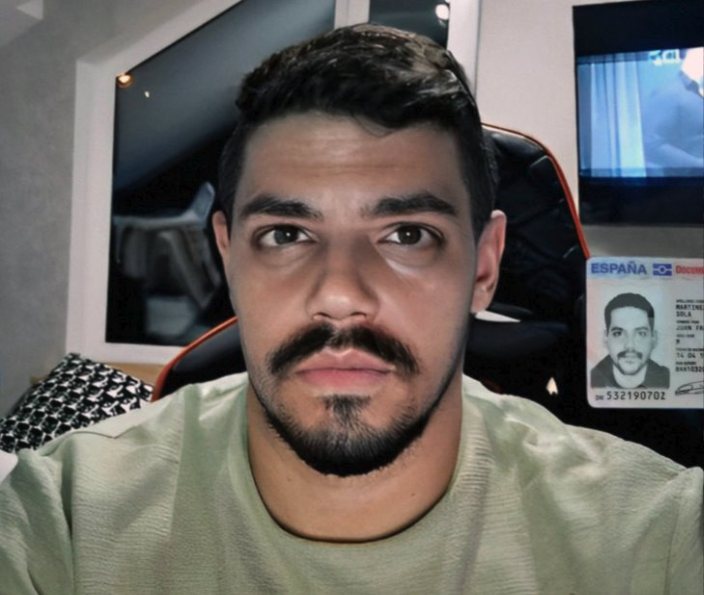

Bypassing identity checks

Another way criminals are using deepfakes is to bypass “know your customer” verification systems. Banks and cryptocurrency exchanges use these systems to verify that their customers are real people. They require new users to take a photo of themselves holding a physical identification document in front of a camera. But criminals have started selling apps on platforms such as Telegram that allow people to get around the requirement.

They work by offering a fake or stolen ID and superimposing a deepfake image on top of a real person’s face to trick the verification system on an Android phone’s camera. Ciancaglini has found examples of people offering these services for the cryptocurrency website Binance for as little as $70.

“They are still fairly basic,” Ciancaglini says. The techniques they use are similar to Instagram filters, where someone else’s face is swapped in for your own.

“What we can expect in the future is that [criminals] will use actual deepfakes … so that they can do more complex authentication,” he says.

Jailbreak-as-a-service

If you ask most AI systems how to make a bomb, you won’t get a useful response.

That’s because AI companies have put in place various safeguards to prevent their models from spewing harmful or dangerous information. Instead of building their own AI models without these safeguards, which is expensive, time-consuming, and difficult, cybercriminals have begun to embrace a new trend: jailbreak-as-a-service.

Most models come with rules about how they can be used. Jailbreaking allows users to manipulate the AI system into generating outputs that violate those policies, for example, writing code for ransomware or generating text that could be used in scam emails.

Services such as EscapeGPT and BlackhatGPT offer anonymized access to language-model APIs and jailbreaking prompts that are updated frequently. To fight back against this growing cottage industry, AI companies such as OpenAI and Google frequently have to plug security holes that could allow their models to be abused.

Jailbreaking services use different tricks to break through safety mechanisms, such as posing hypothetical questions or asking questions in foreign languages. There is a constant cat-and-mouse game between AI companies trying to prevent their models from misbehaving and malicious actors coming up with ever more creative jailbreaking prompts.

These services are hitting the sweet spot for criminals, says Ciancaglini.

“Keeping up with jailbreaks is a tedious activity. You come up with a new one, then you need to test it, then it’s going to work for a couple of weeks, and then OpenAI updates their model,” he adds. “Jailbreaking is a super-interesting service for criminals.”

Doxxing and surveillance

AI language models are a perfect tool not only for phishing but also for doxxing (revealing private, identifying information about someone online), says Balunović. This is because AI language models are trained on vast amounts of internet data, including personal data, and can deduce, for example, where someone might be located.

As an example of how this works, you could ask a chatbot to pretend to be a private investigator with experience in profiling. Then you could ask it to analyze text the victim has written and infer personal information from small clues in that text: for example, their age based on when they went to high school, or where they live based on landmarks they mention on their commute. The more information there is about them on the internet, the more vulnerable they are to being identified.

Balunović was part of a team of researchers that found late last year that large language models, such as GPT-4, Llama 2, and Claude, are able to infer sensitive information such as people’s ethnicity, location, and occupation purely from mundane conversations with a chatbot. In theory, anyone with access to these models could use them this way.

Since their paper came out, new services that exploit this feature of language models have emerged.

While the existence of these services doesn’t indicate criminal activity, it highlights the new capabilities malicious actors could get their hands on. And if regular people can build surveillance tools like this, state actors probably have far better systems, Balunović says.

“The only way for us to prevent these things is to work on defenses,” he says.

Companies should invest in data protection and security, he adds.

For individuals, increased awareness is key. People should think twice about what they share online and decide whether they are comfortable having their personal details used in language models, Balunović says.