In February of final 12 months, the San Francisco–based mostly analysis lab OpenAI introduced that its AI system might now write convincing passages of English. Feed the start of a sentence or paragraph into GPT-2, because it was referred to as, and it might proceed the thought for so long as an essay with virtually human-like coherence.

Now, the lab is exploring what would occur if the identical algorithm had been as an alternative fed a part of a picture. The outcomes, which got an honorable point out for finest paper at this week’s Worldwide Convention on Machine Studying, open up a brand new avenue for picture technology, ripe with alternative and penalties.

At its core, GPT-2 is a robust prediction engine. It realized to know the construction of the English language by billions of examples of phrases, sentences, and paragraphs, scraped from the corners of the web. With that construction, it might then manipulate phrases into new sentences by statistically predicting the order during which they need to seem.

So researchers at OpenAI determined to swap the phrases for pixels and prepare the identical algorithm on photos in ImageNet, the most well-liked picture financial institution for deep studying. As a result of the algorithm was designed to work with one-dimensional information (i.e., strings of textual content), they unfurled the pictures right into a single sequence of pixels. They discovered that the brand new mannequin, named iGPT, was nonetheless capable of grasp the two-dimensional constructions of the visible world. Given the sequence of pixels for the primary half of a picture, it might predict the second half in ways in which a human would deem smart.

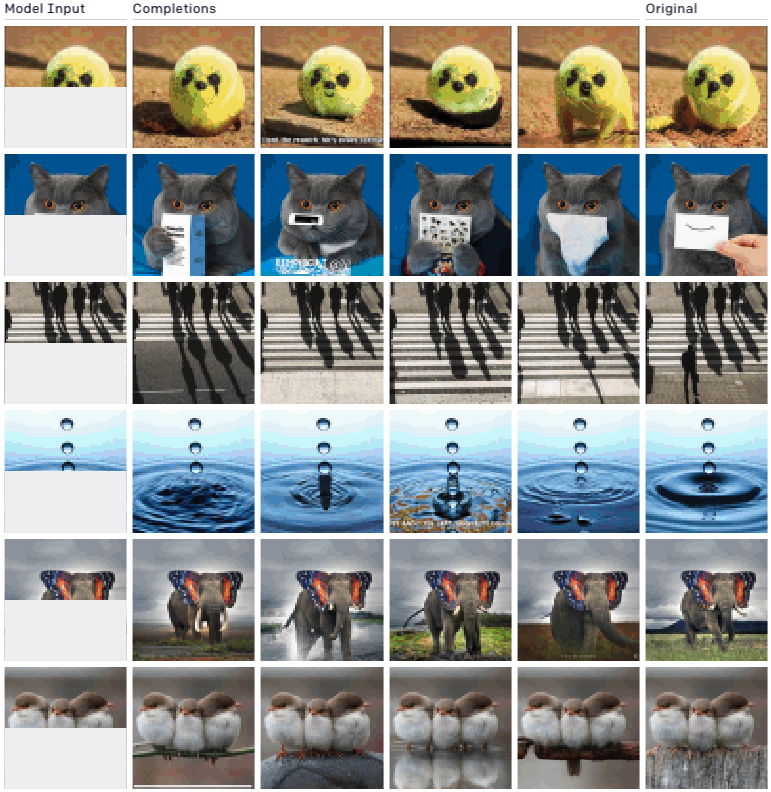

Beneath, you may see a number of examples. The left-most column is the enter, the right-most column is the unique, and the center columns are iGPT’s predicted completions. (See extra examples right here.)

The outcomes are startlingly spectacular and show a brand new path for utilizing unsupervised studying, which trains on unlabeled information, within the improvement of pc imaginative and prescient methods. Whereas early pc imaginative and prescient methods within the mid-2000s trialed such methods earlier than, they fell out of favor as supervised studying, which makes use of labeled information, proved way more profitable. The good thing about unsupervised studying, nevertheless, is that it permits an AI system to be taught in regards to the world with no human filter, and considerably reduces the handbook labor of labeling information.

The truth that iGPT makes use of the identical algorithm as GPT-2 additionally exhibits its promising adaptability. That is in step with OpenAI’s final ambition to realize extra generalizable machine intelligence.

On the similar time, the tactic presents a regarding new strategy to create deepfake photos. Generative adversarial networks, the most typical class of algorithms used to create deepfakes up to now, have to be skilled on extremely curated information. If you wish to get a GAN to generate a face, for instance, its coaching information ought to solely embrace faces. iGPT, in contrast, merely learns sufficient of the construction of the visible world throughout thousands and thousands and billions of examples to spit out photos that would feasibly exist inside it. Whereas coaching the mannequin remains to be computationally costly, providing a pure barrier to its entry, that might not be the case for lengthy.

OpenAI didn’t grant an interview request, however in an inner coverage staff assembly that MIT Know-how Evaluation attended final 12 months, its coverage director, Jack Clark, mused in regards to the future dangers of GPT-style technology, together with what would occur if it had been utilized to photographs. “Video is coming,” he mentioned, projecting the place he noticed the sphere’s analysis trajectory going. “In most likely 5 years, you’ll have conditional video technology over a five- to 10-second horizon.” He then proceeded to explain what he imagined: you’d feed in a photograph of a politician and an explosion subsequent to them, and it will generate a probable output of that politician being killed.

Replace: This text has been up to date to take away the identify of the politician within the hypothetical situation described on the finish.