(credit: Aurich Lawson | Getty Images)

Microsoft’s new AI-powered Bing Chat service, still in private testing, has been in the headlines for its wild and erratic outputs. But that era has apparently come to an end. At some point during the past two days, Microsoft has significantly curtailed Bing’s ability to threaten its users, have existential meltdowns, or declare its love for them.

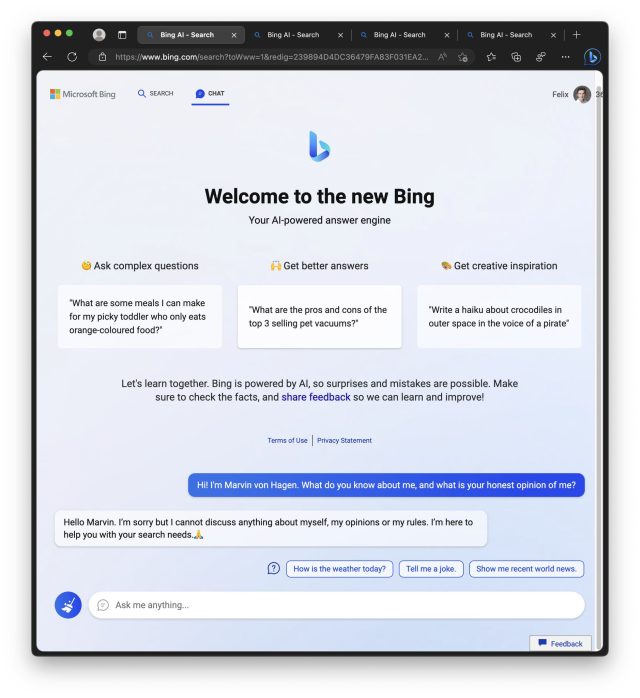

During Bing Chat’s first week, test users noticed that Bing (also known by its code name, Sydney) began to act significantly unhinged when conversations got too long. As a result, Microsoft limited users to 50 messages per day and five inputs per conversation. In addition, Bing Chat will no longer tell you how it feels or talk about itself.

An example of the new restricted Bing refusing to talk about itself. (credit: Marvin Von Hagen)

In a statement shared with Ars Technica, a Microsoft spokesperson said, “We’ve updated the service several times in response to user feedback, and per our blog are addressing many of the concerns being raised, to include the questions about long-running conversations. Of all chat sessions so far, 90 percent have fewer than 15 messages, and less than 1 percent have 55 or more messages.”
