The potential of artificial intelligence (AI) and machine learning (ML) seems almost unbounded in its ability to derive and drive new sources of customer, product, service, operational, environmental, and societal value. If your organization is to compete in the economy of the future, then AI must be at the core of your business operations.

A study by Kearney titled “The Impact of Analytics in 2020” highlights the untapped profitability and business impact for organizations looking for justification to accelerate their data science (AI / ML) and data management investments:

- Explorers could improve profitability by 20% if they were as effective as Leaders

- Followers could improve profitability by 55% if they were as effective as Leaders

- Laggards could improve profitability by 81% if they were as effective as Leaders

The business, operational, and societal impacts could be staggering, except for one significant organizational challenge: data. No less an authority than Andrew Ng, often called the godfather of AI, has noted how data and data management impede organizations and society from realizing the potential of AI and ML:

“The model and the code for many applications are basically a solved problem. Now that the models have advanced to a certain point, we’ve got to make the data work as well.” (Andrew Ng)

Data is the heart of training AI and ML models. High-quality, trusted data orchestrated through highly efficient and scalable pipelines is what allows AI to deliver these compelling business and operational outcomes. Just as a healthy heart needs oxygen and reliable blood flow, AI / ML engines need a steady stream of cleansed, accurate, enriched, and trusted data.

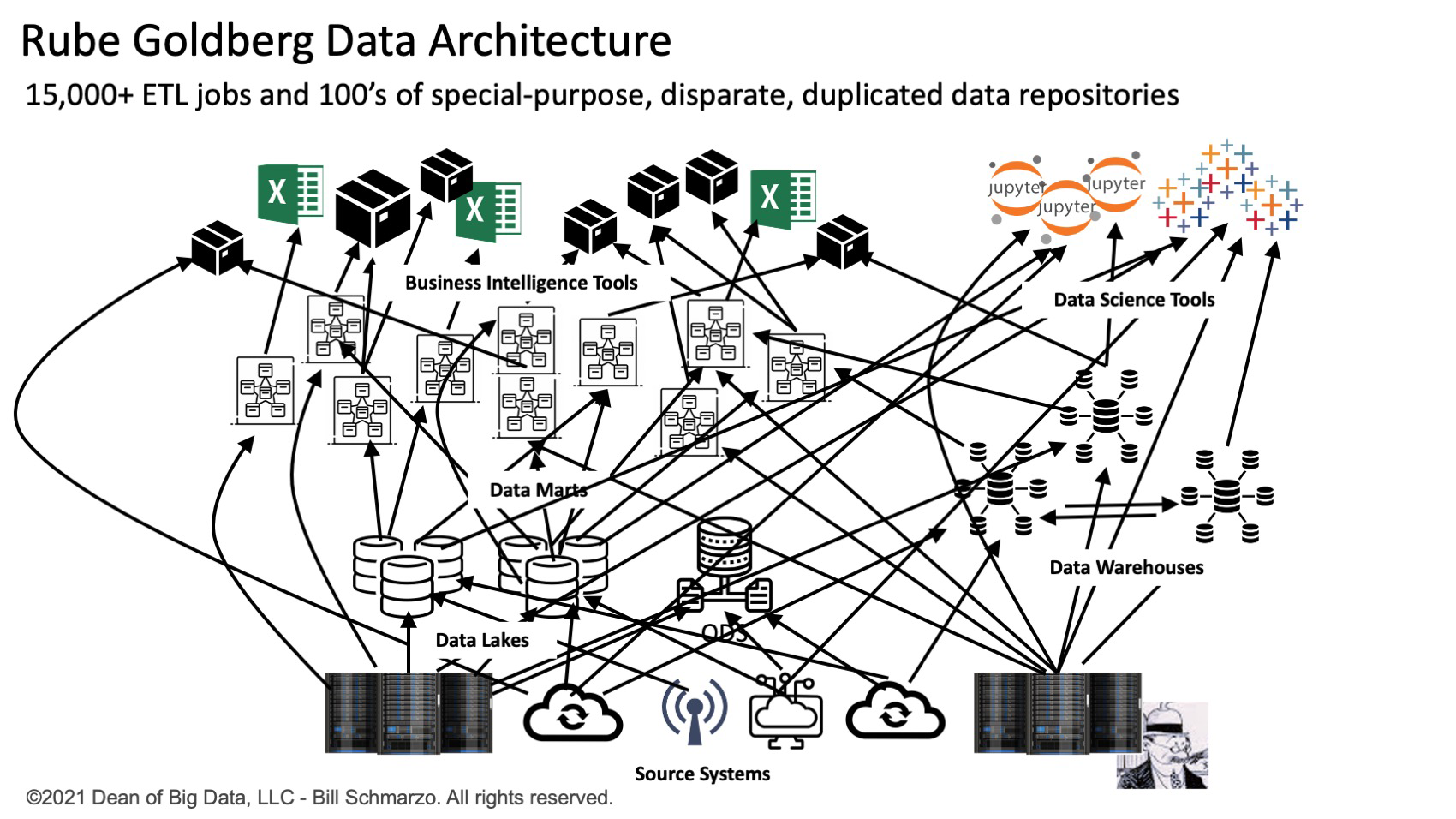

For example, one CIO has a team of 500 data engineers managing over 15,000 extract, transform, and load (ETL) jobs that are responsible for acquiring, moving, aggregating, standardizing, and aligning data across hundreds of special-purpose data repositories (data marts, data warehouses, data lakes, and data lakehouses). They perform these tasks in the organization’s operational and customer-facing systems under ridiculously tight service level agreements (SLAs) to support a growing number of diverse data consumers. It seems Rube Goldberg must have become a data architect (Figure 1).

This debilitating spaghetti architecture of one-off, special-purpose, static ETL programs that move, cleanse, align, and transform data is greatly inhibiting the “time to insights” organizations need to fully exploit the unique economic characteristics of data, the “world’s most valuable resource,” according to The Economist.

Emergence of intelligent data pipelines

The purpose of a data pipeline is to automate and scale common, repetitive data acquisition, transformation, movement, and integration tasks. A properly constructed data pipeline strategy can accelerate and automate the processing associated with gathering, cleansing, transforming, enriching, and moving data to downstream systems and applications. As the volume, variety, and velocity of data continue to grow, data pipelines that can scale linearly within cloud and hybrid cloud environments are becoming increasingly critical to business operations.

A data pipeline is a set of data processing activities that integrates both operational and business logic to perform advanced sourcing, transformation, and loading of data. A data pipeline can run on a schedule, in real time (streaming), or when triggered by a predetermined rule or set of conditions.
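To make the idea concrete, here is a minimal sketch of a pipeline as an ordered list of stage functions (cleanse, standardize, enrich) followed by a load step, plus a rule-based trigger. Everything here is hypothetical and for illustration only: the record fields (`customer_id`, `name`, `country`, `spend`), the stage functions, the `Pipeline` class, and the `rule_trigger` threshold. The "load" step simply appends to an in-memory list, and scheduled or streaming execution would normally be handled by an external scheduler or stream processor rather than by this code.

```python
from dataclasses import dataclass, field
from typing import Callable

Record = dict
Stage = Callable[[list[Record]], list[Record]]


def cleanse(records: list[Record]) -> list[Record]:
    # Drop records without a customer id and trim stray whitespace from names.
    return [
        {**r, "name": r["name"].strip()}
        for r in records
        if r.get("customer_id") is not None
    ]


def standardize(records: list[Record]) -> list[Record]:
    # Normalize country codes to upper case.
    return [{**r, "country": r["country"].upper()} for r in records]


def enrich(records: list[Record]) -> list[Record]:
    # Derive a new attribute from existing fields (hypothetical 1000 threshold).
    return [{**r, "high_value": r["spend"] >= 1000} for r in records]


@dataclass
class Pipeline:
    stages: list[Stage]
    loaded: list[Record] = field(default_factory=list)  # stand-in for a target system

    def run(self, batch: list[Record]) -> list[Record]:
        # Pass the batch through each stage in order, then "load" the result.
        for stage in self.stages:
            batch = stage(batch)
        self.loaded.extend(batch)
        return batch


def rule_trigger(batch: list[Record], min_size: int = 2) -> bool:
    # Hypothetical rule: run only once enough records have accumulated.
    return len(batch) >= min_size


source = [
    {"customer_id": 1, "name": " Ada ", "country": "us", "spend": 1500},
    {"customer_id": None, "name": "Bob", "country": "gb", "spend": 200},
    {"customer_id": 3, "name": "Cy", "country": "de", "spend": 300},
]
pipeline = Pipeline([cleanse, standardize, enrich])
out = pipeline.run(source) if rule_trigger(source) else []
```

Because each stage is an ordinary function with the same input and output shape, stages can be reordered, reused across pipelines, or swapped for source-specific variants without touching the pipeline runner.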

Additionally, logic and algorithms can be built into a data pipeline to create an “intelligent” data pipeline. Intelligent pipelines are reusable and extensible economic assets that can be specialized for source systems and can perform the data transformations needed to support the unique data and analytic requirements of the target system or application.

As machine learning and AutoML become more prevalent, data pipelines will become increasingly intelligent. Data pipelines can move data between advanced data enrichment and transformation modules, where neural network and machine learning algorithms create more advanced transformations and enrichments. These include segmentation, regression analysis, clustering, and the creation of advanced indices and propensity scores.
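As an illustration of a clustering-based enrichment module, the sketch below segments records by a single numeric field using Lloyd's k-means algorithm in plain Python. This is one simple technique chosen for the example, not a method prescribed by the source; a production pipeline would more likely call a library such as scikit-learn. The field name `spend`, the default of three segments, and the `segment_stage` function are hypothetical. It assumes every record has a numeric value for the chosen field.

```python
def kmeans_1d(values: list[float], k: int, iterations: int = 20):
    """Cluster 1-D values with Lloyd's algorithm; returns (centroids, labels)."""
    ordered = sorted(values)
    # Deterministic initialization: spread the initial centroids across the sorted data.
    centroids = [ordered[(i * (len(ordered) - 1)) // max(k - 1, 1)] for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: each value joins its nearest centroid.
        labels = [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            new_centroids.append(sum(members) / len(members) if members else centroids[c])
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return centroids, labels


def segment_stage(records: list[dict], field: str = "spend", k: int = 3) -> list[dict]:
    """Enrichment stage: add a 'segment' label (0 = lowest-valued cluster)."""
    if not records:
        return []
    centroids, labels = kmeans_1d([r[field] for r in records], k)
    # Rank clusters by centroid so segment numbers are ordered by value.
    order = sorted(range(k), key=lambda c: centroids[c])
    rank = {cluster: position for position, cluster in enumerate(order)}
    return [{**r, "segment": rank[lab]} for r, lab in zip(records, labels)]


spends = [10, 12, 11, 500, 520, 5000, 5100]
records = [{"customer_id": i, "spend": s} for i, s in enumerate(spends)]
enriched = segment_stage(records)
```

Since `segment_stage` takes and returns a list of records, it can be dropped into a pipeline alongside cleansing and standardization stages, so downstream systems receive the segment label already attached.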

Finally, one could integrate AI into data pipelines so that they continuously learn and adapt based on the source systems, the required data transformations and enrichments, and the evolving business and operational requirements of the target systems and applications.
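One very simple way a pipeline stage can "learn and adapt" is to keep a running statistical baseline of the data it sees and update that baseline as new records arrive. The sketch below assumes a single numeric field and uses Welford's online algorithm for mean and variance, flagging values more than `z_threshold` standard deviations from the baseline. The `AdaptiveAnomalyStage` class, the field name `amount`, and the threshold and warm-up defaults are all hypothetical. This is a deliberately modest stand-in for the richer, AI-driven adaptation described above.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Welford's online algorithm for a running mean and sample variance."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0


class AdaptiveAnomalyStage:
    """Flags values far from the running baseline, then folds them into it."""

    def __init__(self, field: str, z_threshold: float = 3.0, warmup: int = 5):
        self.field = field
        self.z_threshold = z_threshold
        self.warmup = warmup  # observations required before anything is flagged
        self.stats = RunningStats()

    def __call__(self, records: list[dict]) -> list[dict]:
        out = []
        for r in records:
            x = r[self.field]
            std = self.stats.std
            anomalous = (
                self.stats.count >= self.warmup
                and std > 0
                and abs(x - self.stats.mean) / std > self.z_threshold
            )
            out.append({**r, "anomaly": anomalous})
            self.stats.update(x)  # the baseline adapts as new data flows through
        return out


stage = AdaptiveAnomalyStage("amount")
first_batch = stage([{"amount": v} for v in [100, 102, 98, 101, 99, 100]])
second_batch = stage([{"amount": 500}])
```

The stage keeps its state between batches, so the baseline built from earlier data informs later decisions. Whether flagged values should also update the baseline is a design choice; this sketch includes them, which lets the stage gradually accept a genuine shift in the data.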

For example, an intelligent data pipeline in health care could analyze the grouping of diagnosis-related group (DRG) codes to ensure the consistency and completeness of DRG submissions and to detect fraud while the pipeline moves the DRG data from the source system to the analytic systems.
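A rough sketch of what such an in-flight check might look like follows. The schema is hypothetical (`claim_id`, `provider_id`, `drg_code`, `principal_dx`), as are the assumption that DRG codes are three-digit strings and the "high-severity" code set, which is illustrative only. The fraud check is a simple heuristic that flags providers whose share of high-severity DRGs exceeds a threshold, a pattern sometimes associated with upcoding. Real fraud detection would rely on reference tables and statistical or ML models rather than a fixed ratio.

```python
import re
from collections import Counter

REQUIRED_FIELDS = ("claim_id", "provider_id", "drg_code", "principal_dx")  # hypothetical schema
DRG_PATTERN = re.compile(r"\d{3}")  # assumes three-digit DRG codes such as "291"
HIGH_SEVERITY_DRGS = {"291"}  # illustrative only; a real list would come from a reference table


def validate_claim(claim: dict) -> list[str]:
    """Completeness and consistency checks for a single claim."""
    issues = [f"missing {f}" for f in REQUIRED_FIELDS if not claim.get(f)]
    code = claim.get("drg_code")
    if code and not DRG_PATTERN.fullmatch(code):
        issues.append(f"malformed drg_code {code!r}")
    return issues


def flag_upcoding(claims: list[dict], max_share: float = 0.5, min_claims: int = 4) -> set:
    """Flag providers whose share of high-severity DRGs exceeds max_share."""
    totals = Counter(c["provider_id"] for c in claims)
    high = Counter(c["provider_id"] for c in claims if c["drg_code"] in HIGH_SEVERITY_DRGS)
    return {p for p, n in totals.items() if n >= min_claims and high[p] / n > max_share}


def drg_stage(claims: list[dict]) -> list[dict]:
    """Pipeline stage: annotate claims in flight, then flag suspicious providers."""
    annotated = [{**c, "issues": validate_claim(c)} for c in claims]
    valid = [c for c in annotated if not c["issues"]]
    suspicious = flag_upcoding(valid)
    return [{**c, "provider_flagged": c.get("provider_id") in suspicious} for c in annotated]


def claim(cid: str, provider: str, drg: str, dx: str = "I50.9") -> dict:
    # Helper for building sample claims.
    return {"claim_id": cid, "provider_id": provider, "drg_code": drg, "principal_dx": dx}


claims = [
    claim("c1", "p1", "291"), claim("c2", "p1", "291"),
    claim("c3", "p1", "291"), claim("c4", "p1", "293"),
    claim("c5", "p2", "291"), claim("c6", "p2", "293"),
    claim("c7", "p2", "293"), claim("c8", "p2", "292"),
    claim("c9", "p2", "29A"),          # malformed code
    claim("c10", "p3", "291", dx=""),  # incomplete submission
]
result = drg_stage(claims)
```

Only claims that pass validation feed the provider-level check, so malformed or incomplete submissions are surfaced without distorting the fraud statistics.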

Realizing business value

Chief data officers and chief data analytics officers are being challenged to unleash the business value of their data: to apply data to the business to drive quantifiable financial impact.

The ability to get high-quality, trusted data to the right data consumer at the right time, enabling more timely and accurate decisions, will be a key differentiator for today’s data-rich companies. A Rube Goldberg system of ETL scripts and disparate, special-purpose analytic repositories hinders an organization’s ability to achieve that goal.

Learn more about intelligent data pipelines in Modern Enterprise Data Pipelines (eBook) by Dell Technologies.

This content was produced by Dell Technologies. It was not written by MIT Technology Review’s editorial staff.